
Sentinel

So far, our discussion of Redis has covered only master-slave replication and eventual consistency. Readers might wonder: what happens if the master node crashes unexpectedly at 3 AM? Do we wait for the operations team to get out of bed, manually promote a slave to master, and then tell every program to change its address and restart? Manual recovery like this is slow; it could easily take an hour to recover from the incident. In a large company, an outage of that length could make the news.

We therefore need a high-availability solution that withstands node failures: when a fault occurs, it should fail over from master to slave automatically, programs should keep running without a restart, and the operations team should be able to keep sleeping as if nothing had happened. The official Redis solution for this is Redis Sentinel.

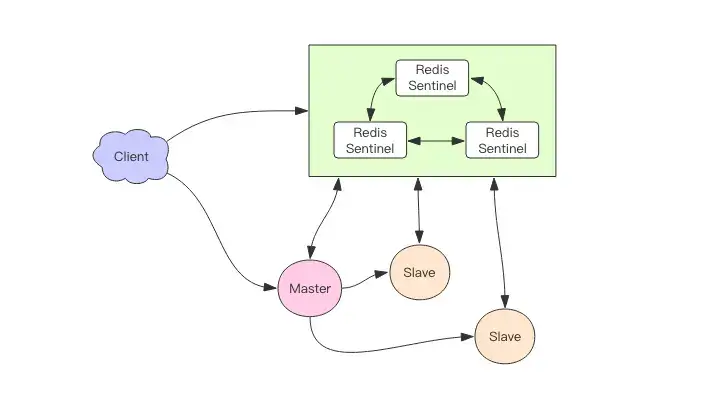

We can think of a Redis Sentinel cluster as the heart of high availability, similar to a ZooKeeper cluster. It usually consists of 3 to 5 nodes, so that the Sentinel cluster itself keeps operating normally even if a minority of its nodes go down.
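To make the 3-to-5 sizing concrete, here is a rough sketch of the arithmetic. It assumes, as the Redis documentation describes, that a failover must be authorized by a majority of the Sentinel processes; the function names are made up for illustration.

```python
def majority(sentinel_count: int) -> int:
    """Smallest number of Sentinels that forms a strict majority."""
    return sentinel_count // 2 + 1


def tolerated_failures(sentinel_count: int) -> int:
    """How many Sentinels can be down while a majority is still reachable."""
    return sentinel_count - majority(sentinel_count)


if __name__ == "__main__":
    for n in (3, 4, 5):
        print(f"{n} sentinels: majority={majority(n)}, "
              f"can lose {tolerated_failures(n)}")
```

Under this assumption, 4 nodes tolerate no more failures than 3 do, which is one reason odd cluster sizes are the usual choice.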

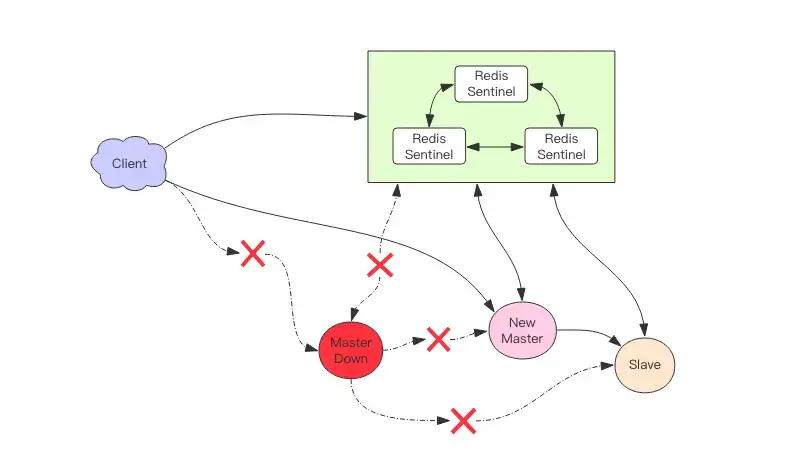

Sentinel continuously monitors the health of the master and slave nodes, and when the master node goes down, it automatically selects the best slave node to become the new master. A client connecting to the cluster first connects to Sentinel and asks it for the address of the master node, then connects to the master node itself to read and write data. When the master node fails, the client asks Sentinel for the address again, and Sentinel returns the address of the latest master. This way, applications complete the node switch automatically without needing a restart. For example, after the master node goes down, the cluster might automatically adjust to the structure shown in the diagram below.

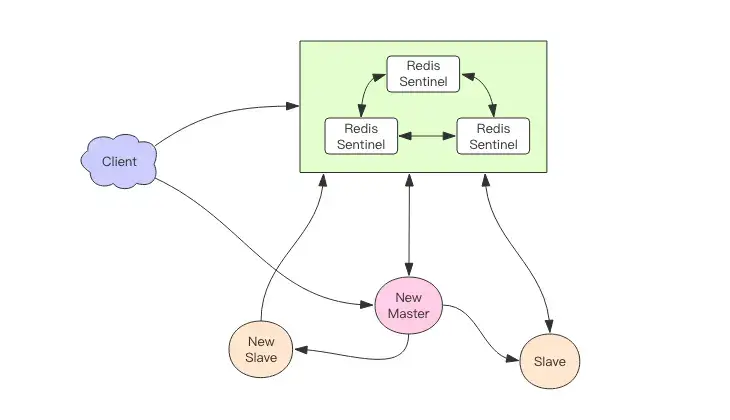

From this diagram, we can see that the master node has failed, the previous master-slave replication has been interrupted, and clients have disconnected from the failed master node. One of the slave nodes is promoted to be the new master, and the other slave nodes begin replicating from the new master. Clients continue to read and write through the new master node. Sentinel keeps monitoring the failed master node, and when it recovers, the cluster adjusts once more.

At this point, the previously failed master node has now become a slave node, establishing a replication relationship with the new master.
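The text above says only that Sentinel picks the "optimal" slave. As a simplified sketch of what that can mean, the code below ranks candidates the way the Redis Sentinel documentation describes: lower replica priority first (a priority of 0 means never promote), then the larger replication offset, then the lexicographically smaller run ID. The `Replica` class and `pick_new_master` function are hypothetical names, and real Sentinel also applies health checks that are omitted here.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Replica:
    name: str
    priority: int      # configured replica priority; 0 means "never promote"
    repl_offset: int   # how much of the master's stream was already received
    run_id: str        # unique ID of the Redis process


def pick_new_master(replicas: List[Replica]) -> Optional[Replica]:
    """Return the preferred promotion candidate, or None if there is none."""
    candidates = [r for r in replicas if r.priority != 0]
    if not candidates:
        return None
    # Lower priority wins, then higher offset, then smaller run ID.
    return min(candidates, key=lambda r: (r.priority, -r.repl_offset, r.run_id))


if __name__ == "__main__":
    replicas = [
        Replica("slave-a", priority=100, repl_offset=500, run_id="aaa"),
        Replica("slave-b", priority=100, repl_offset=900, run_id="bbb"),
    ]
    print(pick_new_master(replicas).name)
```

With equal priorities, the slave that has received more of the replication stream is preferred, which keeps data loss during failover as small as possible.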

Message Loss

Redis master-slave replication is asynchronous, which means that when the master node goes down, the slave nodes may not yet have received all of the replicated messages, and those messages are lost. If the master-slave lag is particularly large, the amount of lost data may be substantial. Sentinel cannot guarantee that no messages are lost, but Redis tries to keep the loss small. Two configuration options on the master limit excessive master-slave lag (since Redis 5.0 they are also available under the names min-replicas-to-write and min-replicas-max-lag):

- min-slaves-to-write 1

- min-slaves-max-lag 10

The first parameter means that at least one slave node must be replicating normally; otherwise, the master stops accepting writes and the service becomes unavailable for writing.

What counts as normal versus abnormal replication is controlled by the second parameter, measured in seconds. If the master receives no feedback from a slave node within 10 seconds, that slave's replication is considered abnormal, either because the network is disconnected or because the slave has stopped responding.
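The interaction between the two parameters can be sketched in a few lines. This is an illustrative model of the rule described above, not Redis source code; `ReplicaStatus` and `master_accepts_writes` are hypothetical names.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ReplicaStatus:
    name: str
    seconds_since_last_ack: float  # time since the master last heard from it


def master_accepts_writes(
    replicas: List[ReplicaStatus],
    min_slaves_to_write: int = 1,
    min_slaves_max_lag: float = 10,
) -> bool:
    """Writes are allowed only if enough replicas acknowledged recently."""
    healthy = [
        r for r in replicas
        if r.seconds_since_last_ack <= min_slaves_max_lag
    ]
    return len(healthy) >= min_slaves_to_write


if __name__ == "__main__":
    print(master_accepts_writes([ReplicaStatus("slave-1", 2.0)]))
    print(master_accepts_writes([ReplicaStatus("slave-1", 15.0)]))
```

The trade-off is explicit: a master that cannot see a healthy slave refuses writes, giving up availability in exchange for a bound on how much data a failover can lose.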

Basic Usage of Sentinel

Next, let’s see how clients use Sentinel. The standard process should allow clients to discover the addresses of master and slave nodes through Sentinel and then establish corresponding connections for data storage operations. Let's look at how the Python client does this.

```python
>>> from redis.sentinel import Sentinel
>>> sentinel = Sentinel([('localhost', 26379)], socket_timeout=0.1)
>>> sentinel.discover_master('mymaster')
('127.0.0.1', 6379)
>>> sentinel.discover_slaves('mymaster')
[('127.0.0.1', 6380)]
```

The default port for Sentinel is 26379, which is different from Redis's default port 6379. The discover_xxx methods of the sentinel object can be used to find the master and slave addresses; there is only one master address, but there may be multiple slave addresses.

```python
>>> master = sentinel.master_for('mymaster', socket_timeout=0.1)
>>> slave = sentinel.slave_for('mymaster', socket_timeout=0.1)
>>> master.set('foo', 'bar')
True
>>> slave.get('foo')
'bar'
```

The xxx_for methods return a client that draws its connections from a connection pool. (Under Python 3, redis-py returns bytes, so the last line prints b'bar' unless decode_responses=True is set.) Since there may be multiple slave addresses, the Redis client uses a round-robin strategy to choose among them.

One question remains: when Sentinel performs a master-slave switch, how does the client learn that the address has changed? Reading the source code, I found that redis-py checks for an address change whenever it establishes a connection.

When the connection pool establishes a new connection, it queries the master address and compares it with the master address held in memory. If the address has changed, it disconnects all existing connections, and new connections use the new address. If the old master goes down, all active connections to it are closed anyway, and the reconnection picks up the new address.
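The check described above can be modeled with a small sketch. It is a simplified stand-in, not the redis-py implementation: `FakeSentinel` and `SketchPool` are hypothetical classes, and connections are plain dictionaries rather than sockets.

```python
class FakeSentinel:
    """Stand-in for a Sentinel node; it just remembers who the master is."""

    def __init__(self, master_address):
        self.master_address = master_address

    def discover_master(self, service_name):
        return self.master_address


class SketchPool:
    """Toy pool that re-checks the master address on every new connection."""

    def __init__(self, sentinel, service_name):
        self.sentinel = sentinel
        self.service_name = service_name
        self.master_address = None
        self.connections = []

    def get_master_address(self):
        latest = self.sentinel.discover_master(self.service_name)
        if self.master_address is not None and latest != self.master_address:
            # The master moved: drop every connection to the old address.
            self.connections.clear()
        self.master_address = latest
        return latest

    def new_connection(self):
        conn = {"address": self.get_master_address()}
        self.connections.append(conn)
        return conn


if __name__ == "__main__":
    sentinel = FakeSentinel(("127.0.0.1", 6379))
    pool = SketchPool(sentinel, "mymaster")
    pool.new_connection()
    sentinel.master_address = ("127.0.0.1", 6380)  # simulate a failover
    print(pool.new_connection(), len(pool.connections))
```

Note that the check runs only when a new connection is created, which is why a connection that stays open across a switch needs the separate handling discussed next.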

However, this is not enough. Suppose Sentinel switches the master and slave roles deliberately while the old master is still running. The connection to the old master stays open, so does it never get switched over?

Digging deeper into the source code, I found that redis-py handles this case in a second place. While processing commands, it catches a special exception called ReadOnlyError; when that happens, it closes all the old connections, and subsequent commands reconnect.

After the master-slave switch, the previous master is demoted to a slave, so every modifying command sent to it raises a ReadOnlyError. If the client issues no modifying commands, the connection may never switch, but nothing is written to the wrong node and the data is not corrupted, so it does not matter that the connection has not switched.
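A minimal sketch of this second safeguard follows. Everything here is a local stand-in written for illustration: `ReadOnlyError` mimics the exception of the same name in redis-py, while `FakeNode` and `FailoverAwareClient` are hypothetical. The client drops its connection when a write is rejected and reconnects on the next command.

```python
class ReadOnlyError(Exception):
    """Local stand-in for the error raised when writing to a slave."""


class FakeNode:
    def __init__(self, name, read_only=False):
        self.name = name
        self.read_only = read_only
        self.data = {}

    def set(self, key, value):
        if self.read_only:
            raise ReadOnlyError("READONLY cannot write against a slave")
        self.data[key] = value
        return True


class FailoverAwareClient:
    def __init__(self, find_master):
        self.find_master = find_master  # callable returning the current master
        self.node = None                # connected lazily

    def set(self, key, value):
        if self.node is None:
            self.node = self.find_master()  # (re)connect to the current master
        try:
            return self.node.set(key, value)
        except ReadOnlyError:
            # The node was demoted: close the stale connection and report it.
            self.node = None
            raise ConnectionError("master was demoted; connection closed")


if __name__ == "__main__":
    old, new = FakeNode("old"), FakeNode("new")
    current = {"master": old}
    client = FailoverAwareClient(lambda: current["master"])
    client.set("foo", "bar")
    old.read_only, current["master"] = True, new   # simulate an active switch
    try:
        client.set("foo", "baz")
    except ConnectionError as exc:
        print("first write after switch failed:", exc)
    print(client.set("foo", "baz"), new.data)
```

In this sketch the first write after the switch fails and the caller has to retry; whether a real client retries transparently depends on its retry configuration.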

Assignment

- Try to set up your own Redis Sentinel cluster.

- Use a Python or Java client to perform some routine operations on the cluster.

- Test both active and passive switching to see if the client can switch connections normally.