
Evaluating RAG Systems

After our previous sessions, you should now have a comprehensive understanding of how to construct a Retrieval-Augmented Generation (RAG) system from scratch. With the various tools provided by LangChain, creating a functional RAG system is straightforward and can be accomplished with just a few lines of code.

However, building a working RAG system is only the beginning. Making it suitable for real-world production requires continuous evaluation and testing, so that its components and strategies can be tuned for stability and response quality.

Today, we will delve into an evaluation framework for RAG systems: RAGAs (Retrieval-Augmented Generation Assessment).

RAG Application Example

Before we dive into the evaluation framework, let's quickly set up a RAG application example for our later evaluation demonstration. We will use the classic speech by Steve Jobs at Stanford University in 2005 as our retrieval content.

python

from langchain_community.document_loaders import TextLoader
from langchain_text_splitters import CharacterTextSplitter
from langchain_openai import OpenAIEmbeddings
from langchain_chroma import Chroma

# Load data
loader = TextLoader('./SteveJobsSpeech.txt')
documents = loader.load()

# Split data into chunks
text_splitter = CharacterTextSplitter(chunk_size=500, chunk_overlap=50)
chunks = text_splitter.split_documents(documents)

# Embed the chunks and store them in a Chroma vector store
vectorStore = Chroma.from_documents(chunks, OpenAIEmbeddings())

# Create retriever
retriever = vectorStore.as_retriever()

Next, we will construct a basic RAG application using the retriever we just created.

python

from langchain_openai import ChatOpenAI
from langchain_core.prompts import ChatPromptTemplate
from langchain_core.runnables import RunnablePassthrough
from langchain_core.output_parsers import StrOutputParser

llm = ChatOpenAI(model_name="gpt-3.5-turbo")

template = """
You are an assistant for question-answering tasks.
Use the following pieces of retrieved context to answer the question.
If you don't know the answer, just say that you don't know.
Keep the answer concise.
Question:
-------
{question}
-------
Context:
-------
{context}
-------
Answer:
"""

prompt = ChatPromptTemplate.from_template(template)

# The retriever fills {context}; the user's query passes through unchanged as {question}
rag_chain = (
    {"context": retriever, "question": RunnablePassthrough()}
    | prompt
    | llm
    | StrOutputParser()
)

RAG Evaluation Overview

Evaluation Granularity

When evaluating the performance of a RAG system, the first consideration is: what aspects should we measure and assess?

Think back to your school days. Exams had an overall score and subject-specific scores; the overall score reflected a student's overall ability, while each subject score indicated mastery in that particular field.

Similarly, for a RAG system, we can evaluate it at two levels: the overall system performance and the performance of individual module components.

- Overall Evaluation: Focuses on the output quality of the RAG system as a whole, including fluency, relevance, consistency, and accuracy of the generated text.

- Component Evaluation: Concentrates on the performance of each part of the RAG system.

A RAG system primarily does two things: retrieves data and generates answers. Therefore, we can divide it into the following components:

- Retriever Component: Retrieves data from the database and refines the results with a series of retrieval rules, so that the LLM has relevant material to draw on when answering.

- Generator Component: Generates answers based on the context provided by the retriever and the user's input question.

Evaluation Metrics

After defining the evaluation granularity, we need to design various evaluation metrics for different levels. For instance, overall evaluation may include accuracy and completeness of answers, while the retriever component focuses on precision and recall of document retrieval.
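As a concrete illustration of the retriever-side metrics mentioned above, here is a minimal sketch of precision and recall for a single query. The function name `retrieval_precision_recall` and the chunk ids are hypothetical and chosen only for this example; it assumes we already know which chunks are relevant to the query.

```python
def retrieval_precision_recall(retrieved_ids, relevant_ids):
    """Return (precision, recall) for one query.

    precision = relevant chunks retrieved / all chunks retrieved
    recall    = relevant chunks retrieved / all relevant chunks
    """
    retrieved = set(retrieved_ids)
    relevant = set(relevant_ids)
    hits = len(retrieved & relevant)
    precision = hits / len(retrieved) if retrieved else 0.0
    recall = hits / len(relevant) if relevant else 0.0
    return precision, recall


# Hypothetical chunk ids, for illustration only.
precision, recall = retrieval_precision_recall(
    retrieved_ids=["c1", "c2", "c3", "c4"],
    relevant_ids=["c2", "c4", "c7"],
)
print(f"precision={precision:.2f}, recall={recall:.2f}")
```

Two of the four retrieved chunks are relevant, and two of the three relevant chunks were retrieved, so precision and recall differ even for the same result list.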

Once we have identified the metrics and their calculation formulas, we must prepare a test dataset to assess the RAG system, allowing us to quantify its performance. This will inform further optimization efforts.

In addition to quantitative evaluations, qualitative analysis is also essential. This could involve case studies to gain insights into the system's performance in specific scenarios or user feedback to gather intuitive information about usability and satisfaction.

In this session, we will focus on quantitative evaluation.

What is RAGAs?

RAGAs is an open-source framework specifically designed for evaluating RAG systems (available on GitHub). One of its standout features is the ability to use LLMs to replace traditional human scoring, allowing for automated evaluation of RAG system performance.

For example, in a traditional evaluation model, determining answer relevance requires human evaluators to judge whether the generated answer directly addresses the original question and to assign a score. RAGAs instead uses an LLM to generate several plausible questions from a given answer and then computes the similarity between the original question and these generated questions.

This automated evaluation approach significantly reduces labor costs and time delays, expediting the development and optimization process.

When using the RAGAs framework for evaluation, we only need to design a set of questions and corresponding standard answers; the rest can be handled by RAGAs. It acts like an intelligent grading machine: teachers prepare the exam paper and standard answers, and once students complete the exam, the system automatically generates scores.

RAGAs provides a range of evaluation metrics for comprehensive assessment at both the component and overall levels.

At the component level, we can use context_precision and context_recall to evaluate the retriever component, and faithfulness and answer_relevancy to assess the generator component.

For the overall process, we can use answer_similarity and answer_correctness as metrics to evaluate the overall performance of the RAG system.

All of these metrics produce scores in the range [0, 1], with higher scores indicating better performance.

In the following sections, we will detail how to use the RAGAs framework and delve deeper into the aforementioned metrics.

Creating an Evaluation Dataset

To compute the scores for the aforementioned metrics, RAGAs requires the following four types of data:

- Questions: The input to the RAG system, which consists of user queries.

- Context: The information returned from the retriever, used to enhance the LLM's response.

- Answers: The actual output of the RAG system.

- Ground Truths: The "standard answers" that we have confirmed prior to evaluation.

These four types of data will form our evaluation dataset. Preparing this dataset is straightforward; we only need to design the questions and ground truths. The context and answers can be generated based on the actual retriever and the RAG system under evaluation.

Here’s how to create the evaluation dataset:

python

from datasets import Dataset

# Questions
questions = [
    "Why did Steve Jobs's biological mother decide to put him up for adoption?",
    "How did Steve Jobs's calligraphy class at Reed College impact his future work?",
    "What event catalyzed the creation of Pixar and Steve Jobs's return to Apple?"
]

# Ground truths
ground_truths = [
    "She was a young, unwed college graduate who wanted her child to be raised by college graduates.",
    "The knowledge of typography he gained was later used to design the Macintosh computer with high-quality typography, influencing the industry.",
    "Being fired from Apple led to the founding of NeXT and Pixar, and eventually to his return to Apple after NeXT was acquired."
]

answers = []
contexts = []

for query in questions:
    # Append the answer generated by the RAG chain
    answers.append(rag_chain.invoke(query))
    # Append the retrieved chunks as a list of strings
    contexts.append([doc.page_content for doc in retriever.get_relevant_documents(query)])

# Construct the evaluation dataset
dataset = Dataset.from_dict({
    "question": questions,
    "contexts": contexts,
    "answer": answers,
    "ground_truth": ground_truths
})

Important Considerations

The design of the evaluation questions directly impacts the results. For instance, if the questions are not clear or specific enough, the LLM may struggle to understand the intent behind the questions. Similarly, if the questions do not cover the key metrics we wish to measure, the evaluation results may be inaccurate.

To facilitate the design of questions, RAGAs provides a method for automatically generating the evaluation dataset.

python

from langchain.document_loaders import TextLoader

from ragas.testset.generator import TestsetGenerator

from langchain_openai import ChatOpenAI, OpenAIEmbeddings

loader = TextLoader('./SteveJobsSpeech.txt')

documents = loader.load()

generator_llm = ChatOpenAI()

critic_llm = ChatOpenAI()

embeddings = OpenAIEmbeddings()

generator = TestsetGenerator.from_langchain(

generator_llm,

critic_llm,

embeddings

)

testset = generator.generate_with_langchain_docs(documents, test_size=8)

df = testset.to_pandas()

# Save the dataset to a CSV file

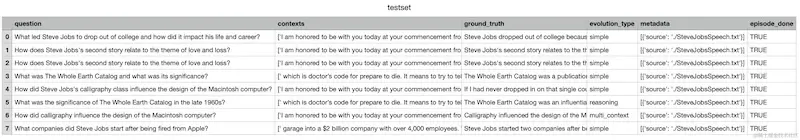

df.to_csv('testset.csv')In the code above, we have created 8 questions (test_size=8). The contents of testset.csv will look something like this:

Typically, we can assess whether the generated questions meet the testing requirements, and then extract the question and ground_truth columns. Following the previous steps, we can use our RAG system and retriever to generate the corresponding answer and context.
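The extraction step described above can be sketched with the standard library alone. This is a minimal illustration, not part of RAGAs: the helper `load_question_pairs` is a hypothetical name, the rows are placeholders rather than real generator output, and skipping rows with an empty ground truth is an assumption about how you might filter unusable questions.

```python
import csv
import io


def load_question_pairs(csv_file):
    """Read question / ground_truth pairs, skipping rows that lack either value."""
    questions, ground_truths = [], []
    for row in csv.DictReader(csv_file):
        question = (row.get("question") or "").strip()
        ground_truth = (row.get("ground_truth") or "").strip()
        if question and ground_truth:
            questions.append(question)
            ground_truths.append(ground_truth)
    return questions, ground_truths


# Placeholder rows standing in for testset.csv; not real generator output.
sample = io.StringIO(
    "question,contexts,ground_truth\n"
    "Placeholder question A,placeholder context,Placeholder answer A\n"
    "Placeholder question B,placeholder context,\n"
)
questions, ground_truths = load_question_pairs(sample)
print(questions)
print(ground_truths)
```

With the real file, the same function could be called on `open('testset.csv', newline='', encoding='utf-8')`. The resulting lists can then be fed through the RAG chain and retriever exactly as in the manually written dataset.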

RAGAs Evaluation Metrics

In this section, we will explore the various metrics used to evaluate the retriever component, generator component, and overall performance of a RAG system in RAGAs.

Retriever Metrics

Context Precision

Context precision assesses whether the relevant information is correctly prioritized in the retrieval results. This metric is calculated using the question, ground truth, and contexts through three steps:

- For each retrieved context, determine if it is related to the given question's ground truth, assigning a score of 1 for relevant and 0 for irrelevant.

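- Compute precision@k for each context, where k is the rank of the current context. This only considers the current context and all preceding contexts.

  $$\text{Precision@}k = \frac{\text{true positives@}k}{\text{true positives@}k + \text{false positives@}k}$$

- Average the precision values over the relevant contexts to get the overall context precision score, where $v_k \in \{0, 1\}$ is the relevance score at rank $k$ and $K$ is the number of retrieved contexts:

  $$\text{Context Precision@}K = \frac{\sum_{k=1}^{K} \left(\text{Precision@}k \times v_k\right)}{\text{total number of relevant contexts in the top } K}$$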
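To make the ranking effect tangible, the three steps can be sketched in plain Python. This is an illustrative sketch, not the RAGAs implementation: it assumes the 0/1 relevance verdicts have already been produced (RAGAs obtains them from an LLM), and it assumes the average is taken over the ranks that hold a relevant context. The name `context_precision_score` is hypothetical.

```python
def context_precision_score(relevance):
    """relevance: 0/1 verdicts for the retrieved contexts, in rank order."""
    hits = 0            # relevant contexts seen so far
    weighted_sum = 0.0  # sum of precision@k over relevant ranks only
    for k, v_k in enumerate(relevance, start=1):
        hits += v_k
        precision_at_k = hits / k
        weighted_sum += precision_at_k * v_k
    return weighted_sum / hits if hits else 0.0


# Same two relevant contexts, placed at different ranks.
print(round(context_precision_score([1, 1, 0]), 3))
print(round(context_precision_score([1, 0, 1]), 3))
print(round(context_precision_score([0, 1, 1]), 3))
```

The three calls contain the same number of relevant contexts, yet the score drops as the relevant ones move further down the list, which is exactly the prioritization this metric is meant to capture.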

python

from ragas import evaluate
from ragas.metrics import context_precision

result = evaluate(
    dataset=dataset,
    metrics=[context_precision]
)

df = result.to_pandas()
df.to_csv('context_precision.csv')

Context Recall

Context recall measures how much of the ground truth content can be found in the retrieved contexts, with values ranging from 0 to 1 (higher values indicate more comprehensive results).

Steps for calculation:

- Split the ground truth into n individual sentences.

- Check how many sentences, k, can be found in the retrieved contexts.

- Compute the final result as k/n.
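The k/n calculation can be sketched as follows. Note the loud assumption: RAGAs uses an LLM to decide whether a sentence is supported by the contexts, whereas this sketch substitutes a crude word-overlap check (`naive_is_supported`, a hypothetical helper with an arbitrary 0.7 threshold) purely so that the example runs offline. The sentences are toy data.

```python
import re


def split_sentences(text):
    """Naive splitter on ., ! and ?; adequate for a short illustration."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def naive_is_supported(sentence, contexts, threshold=0.7):
    """Toy stand-in for the LLM judge: share of the sentence's words seen in the contexts."""
    words = set(re.findall(r"[a-z0-9']+", sentence.lower()))
    context_words = set(re.findall(r"[a-z0-9']+", " ".join(contexts).lower()))
    return bool(words) and len(words & context_words) / len(words) >= threshold


def context_recall_score(ground_truth, contexts, is_supported=naive_is_supported):
    sentences = split_sentences(ground_truth)  # the n sentences
    if not sentences:
        return 0.0
    k = sum(1 for s in sentences if is_supported(s, contexts))
    return k / len(sentences)


# Toy data: only the first ground-truth sentence appears in the contexts.
contexts = ["The cat sat on the mat.", "The dog slept in the garden."]
ground_truth = "The cat sat on the mat. The bird flew over the house."
print(context_recall_score(ground_truth, contexts))
```

Passing a different `is_supported` callable, for example one backed by an LLM call, changes the judgment step without touching the k/n arithmetic.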

python

from ragas import evaluate
from ragas.metrics import context_recall

result = evaluate(
    dataset=dataset,
    metrics=[context_recall]
)

df = result.to_pandas()
df.to_csv('context_recall.csv')

Generator Metrics

Faithfulness

Faithfulness measures the consistency of the generated answer with the provided context. An answer is deemed faithful if all its information can be derived from the context.

The calculation is similar to that of context recall:

- Split the generated answer into n sentences.

- Check how many sentences, k, can be derived from the context.

- The final score is k/n.
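When a faithfulness score is low, it helps to see which sentences were judged unsupported. The sketch below assumes the per-sentence verdicts are already available (in RAGAs they come from an LLM); the function names and the toy verdicts are hypothetical and only illustrate the bookkeeping.

```python
def faithfulness_score(verdicts):
    """verdicts maps each answer sentence to True if the context supports it."""
    if not verdicts:
        return 0.0
    return sum(verdicts.values()) / len(verdicts)


def unsupported_sentences(verdicts):
    """Sentences that could not be derived from the context (candidate hallucinations)."""
    return [sentence for sentence, supported in verdicts.items() if not supported]


# Toy verdicts, as if returned by a judge; not real evaluation output.
verdicts = {
    "The mat is in the kitchen.": True,
    "The mat is red.": True,
    "The mat was bought last year.": False,
}
print(round(faithfulness_score(verdicts), 3))
print(unsupported_sentences(verdicts))
```

Listing the unsupported sentences alongside the score turns a single number into something actionable when tuning the prompt or the retriever.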

python

from ragas import evaluate
from ragas.metrics import faithfulness

result = evaluate(
    dataset=dataset,
    metrics=[faithfulness]
)

df = result.to_pandas()
df.to_csv('faithfulness.csv')

Answer Relevancy

Answer relevancy evaluates how relevant the generated answer is to the question. A lower score indicates incomplete or redundant information, while a higher score indicates a high degree of relevance.

This metric is computed by generating multiple questions from the answer and calculating the average cosine similarity to the original question.
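The averaging step can be sketched without any model calls. In this minimal example the three-dimensional vectors are toy stand-ins for real embeddings, and `answer_relevancy_score` is a hypothetical name; in practice the generated questions come from an LLM and the vectors from an embedding model.

```python
import math


def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def answer_relevancy_score(question_vec, generated_question_vecs):
    """Mean cosine similarity between the original question and each generated question."""
    if not generated_question_vecs:
        return 0.0
    sims = [cosine_similarity(question_vec, g) for g in generated_question_vecs]
    return sum(sims) / len(sims)


# Toy vectors: one generated question matches the original, the other is unrelated.
original = [1.0, 0.0, 0.0]
generated = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
print(answer_relevancy_score(original, generated))
```

If the answer drifts off topic, the questions an LLM reconstructs from it drift too, and the average similarity falls accordingly.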

python

from ragas import evaluate
from ragas.metrics import answer_relevancy

result = evaluate(
    dataset=dataset,
    metrics=[answer_relevancy]
)

df = result.to_pandas()
df.to_csv('answer_relevancy.csv')

Overall RAG Metrics

Answer Similarity

Answer similarity assesses the semantic similarity between the generated answer and the ground truth. Scores range from 0 to 1, with higher scores indicating better alignment.

RAGAs uses the same embedding model to convert the answer and ground truth into vectors and computes the cosine similarity.

python

from ragas import evaluate
from ragas.metrics import answer_similarity

result = evaluate(
    dataset=dataset,
    metrics=[answer_similarity]
)

df = result.to_pandas()
df.to_csv('answer_similarity.csv')

Answer Correctness

Answer correctness measures how closely the generated answer aligns with the true facts. It combines semantic similarity and factual similarity.

- Semantic similarity is calculated using the method described above.

- Factual similarity assesses the overlap of facts:

- TP (True Positive): Facts present in both answer and ground truth.

- FP (False Positive): Facts present in answer but not in ground truth.

- FN (False Negative): Facts present in ground truth but not in answer.

The F1 score is calculated from these counts using the formula:

$$F_1 = \frac{|TP|}{|TP| + 0.5 \times \left(|FP| + |FN|\right)}$$

After obtaining both scores, RAGAs combines them using a weighted average (default: factual similarity weight = 0.75, semantic similarity weight = 0.25).
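The combination of the two scores can be worked through numerically. This sketch assumes the fact counts (TP, FP, FN) and the semantic similarity have already been obtained, uses the default 0.75 / 0.25 weights quoted above, and computes F1 as TP / (TP + 0.5 × (FP + FN)). The function names and input numbers are hypothetical.

```python
def factual_f1(tp, fp, fn):
    """F1 over facts: TP / (TP + 0.5 * (FP + FN))."""
    denominator = tp + 0.5 * (fp + fn)
    return tp / denominator if denominator else 0.0


def answer_correctness_score(tp, fp, fn, semantic_similarity, weights=(0.75, 0.25)):
    """Weighted average of factual F1 and semantic similarity."""
    factual_weight, semantic_weight = weights
    total = factual_weight + semantic_weight
    return (
        factual_weight * factual_f1(tp, fp, fn)
        + semantic_weight * semantic_similarity
    ) / total


# Made-up inputs: 2 shared facts, 1 extra fact in the answer, 1 missing fact.
score = answer_correctness_score(tp=2, fp=1, fn=1, semantic_similarity=0.9)
print(round(score, 3))
```

Here the factual F1 is 2/3, so a high semantic similarity of 0.9 lifts the final score only modestly, reflecting the heavier weight placed on factual overlap.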

python

from ragas import evaluate
from ragas.metrics import answer_correctness

result = evaluate(
    dataset=dataset,
    metrics=[answer_correctness]
)

df = result.to_pandas()
df.to_csv('answer_correctness.csv')

These metrics collectively provide a comprehensive framework for evaluating the performance of RAG systems, enabling further optimizations and improvements.

Summary

Before deploying a RAG system, it is crucial to evaluate it across multiple dimensions to ensure optimal performance through continuous iteration. Evaluations should be conducted at both the component and overall system levels, with component-level assessments focusing on the retriever and generator.

The RAGAs framework is specifically designed for assessing RAG systems, leveraging LLMs to automate scoring and expedite the optimization process. RAGAs offers various evaluation metrics: at the component level, we typically use context_precision and context_recall for the retriever, and faithfulness and answer_relevancy for the generator. For overall performance, answer_similarity and answer_correctness serve as key indicators.

The design of the evaluation test dataset significantly impacts the accuracy of the final assessment results. Therefore, it's essential to design evaluation questions thoughtfully and comprehensively. RAGAs also provides automated methods for creating test datasets, which can then be refined for further optimization.