Data Embedding and Vector Storage

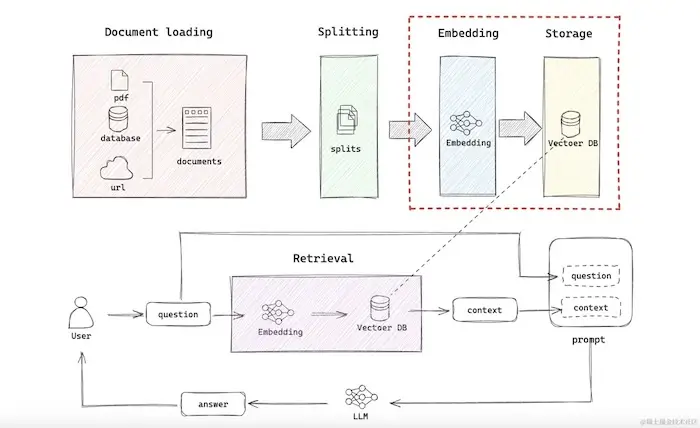

In the previous two sections, we learned about document loading and splitting strategies for building RAG applications. To read different types and sources of external data, various document loaders are required. Once the data is loaded into Document objects that LangChain can recognize, we often need to split the original documents into smaller chunks for easier processing. This is especially important since original documents can be quite large, such as PDFs that may contain dozens or even hundreds of pages.

After splitting the documents into manageable chunks, each chunk will be embedded into vectors and stored in a vector database for later retrieval. Today, we will cover both text embedding and vector storage in detail.

Quick Introduction to Embedding Technology

Basic Principles of Embedding Technology

Let's briefly introduce some fundamental concepts of embedding technology, which will help you understand the selection and evaluation criteria discussed later.

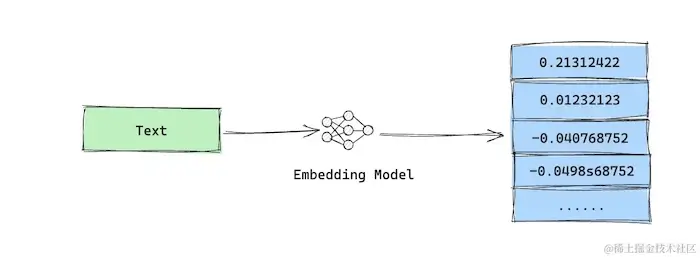

Embedding technology is a key technique in natural language processing (NLP) that converts input content into multi-dimensional vectors. It analyzes the input along many dimensions and represents each dimension as a floating-point number. Together, these floating-point numbers form a multi-dimensional vector.

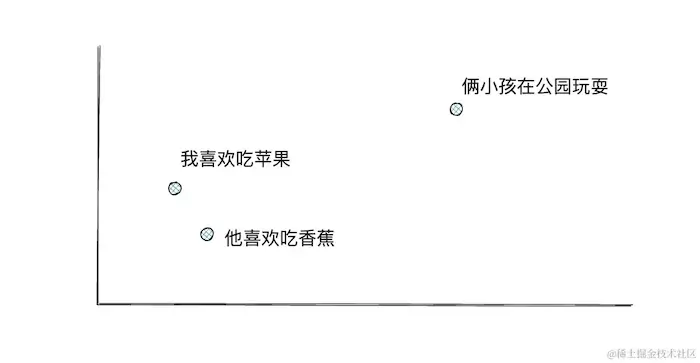

Words that are semantically similar have vectors that are close together in space. By comparing the distances between the vectors of different texts, computers can understand the relevance between those texts.

For example, consider the following three sentences:

- 我喜欢吃苹果 (I like eating apples).

- 他喜欢吃香蕉 (He likes eating bananas).

- 俩小孩在公园玩耍 (Two children are playing in the park).

When we extract the dimensional label information from these sentences and vectorize them, the resulting vector data might look like this:

In this visualization, sentences 1 and 2 are closer together because they share a similar meaning, while sentence 3 is farther away. When we search for "他喜欢吃什么水果?" (What fruit does he like to eat?), both sentences 1 and 2 contain the attribute "水果" (fruit), but since sentence 2 describes "他" (he), it will be retrieved.
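The comparison described above can be sketched with cosine similarity, one common way to measure how close two vectors are. The 2-D coordinates below are invented purely for illustration (they do not come from any real model), and the query vector is likewise hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (closer to 1.0 = more similar)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical 2-D embeddings, invented for illustration only.
sentences = {
    "I like eating apples": [0.9, 0.1],
    "He likes eating bananas": [0.8, 0.3],
    "Two children are playing in the park": [0.1, 0.9],
}

# Hypothetical embedding of the query "What fruit does he like to eat?"
query_vector = [0.85, 0.35]

# Rank the sentences from most to least similar to the query.
ranked = sorted(
    sentences,
    key=lambda s: cosine_similarity(sentences[s], query_vector),
    reverse=True,
)
for sentence in ranked:
    print(sentence, round(cosine_similarity(sentences[sentence], query_vector), 3))
```

With these made-up coordinates, the banana sentence ranks first and the park sentence last, mirroring the behavior described in the text.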

In this example, we used only two dimensions, but actual embedding models can have hundreds or thousands of dimensions. For instance, OpenAI's embedding model, text-embedding-ada-002, has 1536 dimensions, while the open-source GritLM-7B embedding model has as many as 4096 dimensions.

More dimensions allow computers to better understand the semantics of the text, but they also require more computational resources and time for embedding and retrieval. Therefore, we must strike a balance between the accuracy of retrieval and resource consumption based on our business document data.
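To make the resource side of this trade-off concrete, here is a rough back-of-the-envelope calculation of raw vector storage. It assumes each value is stored as a 4-byte float32 and ignores index overhead and metadata; the one-million-chunk corpus size and the 384-dimension model are hypothetical.

```python
def raw_vector_size_mib(num_vectors: int, dimensions: int, bytes_per_value: int = 4) -> float:
    """Raw storage for the vectors alone, in MiB (assumes float32 by default)."""
    return num_vectors * dimensions * bytes_per_value / (1024 ** 2)


# Hypothetical corpus of one million chunks at three embedding sizes.
for dims in (384, 1536, 4096):
    print(f"{dims} dimensions: {raw_vector_size_mib(1_000_000, dims):.1f} MiB")
```

Storage grows linearly with the number of dimensions, and a brute-force distance computation grows in the same way, which is why dimension count belongs in the model-selection discussion.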

How to Select an Embedding Model

As AI technology rapidly evolves, so does embedding technology, with new embedding models emerging daily. With so many options available, how should we choose an embedding model?

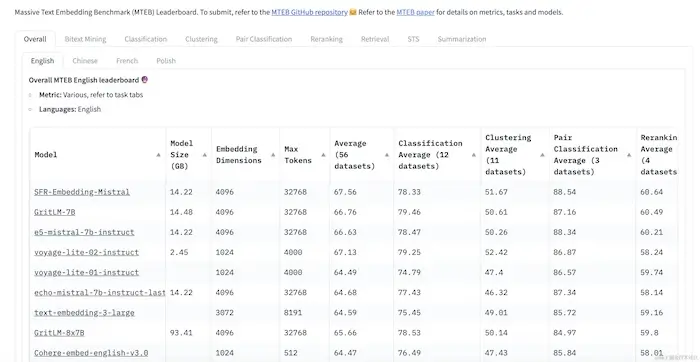

Niklas Muennighoff and others proposed the MTEB (Massive Text Embedding Benchmark), a comprehensive benchmarking tool for text embeddings. MTEB collects data from 58 public datasets covering 112 languages and has designed eight test tasks—such as text classification, clustering, retrieval, and text similarity—to evaluate the performance of text embedding models.

The MTEB code and usage guidelines are open-source on GitHub, making it easy to evaluate specific models against these datasets. Additionally, MTEB maintains a public leaderboard on Hugging Face, where we can find various text embedding models along with their MTEB performance metrics.

Key Metrics for RAG Systems

When constructing a RAG system, several key metrics are particularly relevant:

Retrieval Average: This measures the relevance of the retrieval results to the query. A higher relevance leads to a higher Retrieval Average ranking.

Embedding Dimensions: This indicates the number of dimensions in the embedding. Fewer dimensions provide faster embedding speed and higher storage efficiency, while more dimensions can capture subtle differences in the data, leading to more accurate retrieval. However, this also results in longer retrieval times and greater resource consumption. A balance must be struck between data complexity and retrieval performance.

Model Size: The size of the model in gigabytes (GB), which indicates the computational resources required to run it. Generally, larger models have more dimensions.

Max Tokens: This represents the maximum number of tokens that a single embedding can support. Since we have already split the data into smaller chunks, this metric is less critical for model selection. Instead, it should be considered when deciding on the document-splitting strategy.

It's important to note that the rankings on the embedding model leaderboard are based on MTEB's public datasets, which may differ from the datasets we use in practice. Therefore, a better approach is to select a few top-ranked models based on our relevant metrics and then evaluate them with our own datasets to identify the best-performing embedding model.

In constructing a RAG system, evaluating an embedding model typically involves considering two aspects:

Embedding Latency: The time required for text vectorization.

Retrieval Quality: The relevance of the retrieval results to the query.
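A minimal sketch of such an evaluation is shown below. It measures both aspects for one embedder on a tiny labelled set. The `letter_counts` embedder is a hypothetical stand-in so the example runs without any model; in practice you would pass a function that calls each candidate embedding model, and use chunks and queries from your own data.

```python
import math
import time
from typing import Callable, Dict, List

Vector = List[float]


def letter_counts(text: str) -> Vector:
    """Hypothetical stand-in embedder: one dimension per letter a-z."""
    counts = [0.0] * 26
    for ch in text.lower():
        if "a" <= ch <= "z":
            counts[ord(ch) - ord("a")] += 1.0
    return counts


def cosine(a: Vector, b: Vector) -> float:
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return sum(x * y for x, y in zip(a, b)) / norm if norm else 0.0


def evaluate(embed: Callable[[str], Vector], chunks: List[str],
             labelled_queries: Dict[str, int], k: int = 1) -> Dict[str, float]:
    """Return average embedding latency and hit rate at k for one embedder."""
    start = time.perf_counter()
    chunk_vectors = [embed(chunk) for chunk in chunks]
    avg_seconds = (time.perf_counter() - start) / len(chunks)

    hits = 0
    for query, expected_index in labelled_queries.items():
        query_vector = embed(query)
        ranked = sorted(range(len(chunks)),
                        key=lambda i: cosine(chunk_vectors[i], query_vector),
                        reverse=True)
        hits += expected_index in ranked[:k]  # True counts as 1
    return {"avg_embed_seconds": avg_seconds,
            "hit_rate_at_k": hits / len(labelled_queries)}


# Tiny labelled set: each query maps to the index of the chunk it should retrieve.
chunks = ["apple banana fruit", "park children playing"]
labelled_queries = {"banana apple": 0, "children in the park": 1}
report = evaluate(letter_counts, chunks, labelled_queries, k=1)
print(report)
```

Running the same `evaluate` call once per candidate model gives directly comparable latency and quality numbers on your own data.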

Text Embedding in LangChain

Embeddings Class

LangChain has designed an abstract class called Embeddings specifically for interacting with text embedding models. This class includes two important methods: embed_documents and embed_query, which are used to embed multiple documents and a single query text, respectively, generating the corresponding vector data.

```python
class Embeddings(ABC):
    @abstractmethod
    def embed_documents(self, texts: List[str]) -> List[List[float]]:
        """Embed search docs."""

    @abstractmethod
    def embed_query(self, text: str) -> List[float]:
        """Embed query text."""
```

The Embeddings class provides a unified interface for integrating various embedding models into LangChain. An embedding model is integrated by writing a class that implements Embeddings. LangChain already integrates dozens of embedding models, such as OpenAI and Cohere, allowing for out-of-the-box use. A detailed list of these models can be found in the LangChain integrations documentation.

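As a rough illustration of what implementing this interface involves, here is a toy embedding class. To keep the example runnable without LangChain installed, it declares a local `Embeddings` stand-in that mirrors the two abstract methods; in a real project you would subclass LangChain's own `Embeddings` instead. `HashingEmbeddings` and its word-hashing scheme are hypothetical and not suitable for real retrieval.

```python
import hashlib
from abc import ABC, abstractmethod
from typing import List


class Embeddings(ABC):
    """Local stand-in that mirrors the LangChain interface."""

    @abstractmethod
    def embed_documents(self, texts: List[str]) -> List[List[float]]:
        """Embed search docs."""

    @abstractmethod
    def embed_query(self, text: str) -> List[float]:
        """Embed query text."""


class HashingEmbeddings(Embeddings):
    """Hypothetical toy model: hashes each word into one of `size` buckets."""

    def __init__(self, size: int = 8) -> None:
        self.size = size

    def _embed(self, text: str) -> List[float]:
        vector = [0.0] * self.size
        for word in text.lower().split():
            digest = hashlib.md5(word.encode("utf-8")).digest()
            vector[digest[0] % self.size] += 1.0  # count words per bucket
        return vector

    def embed_documents(self, texts: List[str]) -> List[List[float]]:
        return [self._embed(text) for text in texts]

    def embed_query(self, text: str) -> List[float]:
        return self._embed(text)


model = HashingEmbeddings(size=8)
vectors = model.embed_documents(["Hi there!", "Oh, hello!"])
print(len(vectors), len(vectors[0]))
```

Because both methods are implemented, any code written against the `Embeddings` interface can use this class without knowing how the vectors are produced.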
Let's take OpenAIEmbeddings as an example to learn about the implementation and usage of embedding models in LangChain.

```python
class OpenAIEmbeddings(BaseModel, Embeddings):
    ...
    model: str = "text-embedding-ada-002"
    ...

    def embed_documents(
        self, texts: List[str], chunk_size: Optional[int] = 0
    ) -> List[List[float]]:
        # self.deployment = self.model
        engine = cast(str, self.deployment)
        # Create embeddings for each text
        return self._get_len_safe_embeddings(texts, engine=engine)

    def embed_query(self, text: str) -> List[float]:
        return self.embed_documents([text])[0]
```

The OpenAIEmbeddings class defaults to using OpenAI's text-embedding-ada-002 as the embedding model. In the embed_documents method, it calls _get_len_safe_embeddings for the embedding operation, while embed_query simply calls embed_documents to embed a single text.

```python
from langchain_openai import OpenAIEmbeddings

embeddings_model = OpenAIEmbeddings()

embeddings = embeddings_model.embed_documents(
    [
        "Hi there!",
        "Oh, hello!",
        "What's your name?",
        "My friends call me World",
        "Hello World!",
    ]
)

print(len(embeddings))  # Output: 5
print(len(embeddings[0]))  # Output: 1536
```

As seen in the example, embeddings_model embeds each text separately. The text-embedding-ada-002 model has 1536 embedding dimensions, so each text is converted into a vector of 1536 floating-point values.

Consider the following use case:

```python
from langchain_openai import OpenAIEmbeddings

embeddings_model = OpenAIEmbeddings()

text = "What's your name?"
embedded1 = embeddings_model.embed_documents([text])
...
embedded2 = embeddings_model.embed_documents([text])
```

In this scenario, if the same text data is embedded twice due to business needs, the embedding model will be called repeatedly, wasting computational resources and increasing system latency.

To avoid redundant embedding computations, LangChain has designed a caching wrapper called CacheBackedEmbeddings. When a text is first embedded, the text and its embedding result are stored in a key-value format. The next time the same text needs to be embedded, the result can be retrieved directly from the cache.
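Before looking at LangChain's implementation, the idea itself can be sketched in a few lines. This is a simplified illustration, not LangChain's code: `CountingEmbedder` is a hypothetical stand-in for a slow or billable model, and `DictCachedEmbedder` keeps the cache in a plain in-memory dict.

```python
from typing import Dict, List


class CountingEmbedder:
    """Hypothetical stand-in for a real model; counts how often it is called."""

    def __init__(self) -> None:
        self.calls = 0

    def embed_documents(self, texts: List[str]) -> List[List[float]]:
        self.calls += 1
        # Fake 2-D "embedding": text length and number of spaces.
        return [[float(len(t)), float(t.count(" "))] for t in texts]


class DictCachedEmbedder:
    """Wraps an embedder and remembers every text it has already embedded."""

    def __init__(self, underlying: CountingEmbedder) -> None:
        self.underlying = underlying
        self.cache: Dict[str, List[float]] = {}

    def embed_documents(self, texts: List[str]) -> List[List[float]]:
        # Only texts that are not cached yet are sent to the model.
        missing = [t for t in dict.fromkeys(texts) if t not in self.cache]
        if missing:
            new_vectors = self.underlying.embed_documents(missing)
            for text, vector in zip(missing, new_vectors):
                self.cache[text] = vector
        return [self.cache[t] for t in texts]


model = CountingEmbedder()
cached = DictCachedEmbedder(model)
first = cached.embed_documents(["What's your name?"])
second = cached.embed_documents(["What's your name?"])
print(model.calls)  # the underlying model was only needed once
```

The second call is served entirely from the dict, so the underlying model is not invoked again.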

Let's take a look at how CacheBackedEmbeddings is designed and implemented:

```python
class CacheBackedEmbeddings(Embeddings):
    def __init__(
        ...
        underlying_embeddings: Embeddings,
        document_embedding_store: BaseStore[str, List[float]],
        ...
    ) -> None:
        ...

    def embed_documents(self, texts: List[str]) -> List[List[float]]:
        # Attempt to get cached embeddings for the list of texts
        vectors: List[Union[List[float], None]] = self.document_embedding_store.mget(texts)
        # Collect the indices of texts that were not found in the cache
        all_missing_indices: List[int] = [i for i, vector in enumerate(vectors) if vector is None]

        for missing_indices in batch_iterate(self.batch_size, all_missing_indices):
            missing_texts = [texts[i] for i in missing_indices]
            # Call the embedding model to embed the missing texts in a batch
            missing_vectors = self.underlying_embeddings.embed_documents(missing_texts)
            # Cache the embedding results
            self.document_embedding_store.mset(list(zip(missing_texts, missing_vectors)))
            # Merge the embedding results into the final result array
            for index, updated_vector in zip(missing_indices, missing_vectors):
                vectors[index] = updated_vector

        return cast(List[List[float]], vectors)  # Nones should have been resolved by now

    def embed_query(self, text: str) -> List[float]:
        # Directly call the embedding model without caching
        return self.underlying_embeddings.embed_query(text)
```

There are several key points to note in the implementation of CacheBackedEmbeddings:

- Inherits from the abstract class Embeddings: CacheBackedEmbeddings inherits from Embeddings, so it remains an embedding model class. We pass the non-cached embedding model instance in as underlying_embeddings, and the resulting CacheBackedEmbeddings instance can then replace it wherever an embedding model is expected.

- Supports various cache storage methods: The member variable document_embedding_store represents the storage object for caching embedding results. This object is an instance of BaseStore, an abstract class that defines several abstract methods, including mget, mset, and mdelete, for managing embedding caches.

```python
class BaseStore(Generic[K, V], ABC):
    @abstractmethod
    def mget(self, keys: Sequence[K]) -> List[Optional[V]]:
        """Get the values associated with the given keys."""

    @abstractmethod
    def mset(self, key_value_pairs: Sequence[Tuple[K, V]]) -> None:
        """Set the values for the given keys."""

    @abstractmethod
    def mdelete(self, keys: Sequence[K]) -> None:
        """Delete the given keys and their associated values."""

    @abstractmethod
    def yield_keys(self, *, prefix: Optional[str] = None) -> Union[Iterator[K], Iterator[str]]:
        """Get an iterator over keys that match the given prefix."""
```

This design allows different storage mediums (e.g., MySQL, Redis, in-memory, local files) to inherit from BaseStore, enabling various storage implementations.

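To show how little a storage backend has to provide, here is a minimal dict-backed sketch with the same four methods. `DictStore` is a hypothetical name used only for this illustration, and it deliberately does not import LangChain; a real backend would inherit from `BaseStore`.

```python
from typing import Dict, Iterator, List, Optional, Sequence, Tuple


class DictStore:
    """Hypothetical in-memory store exposing the four BaseStore methods."""

    def __init__(self) -> None:
        self._data: Dict[str, List[float]] = {}

    def mget(self, keys: Sequence[str]) -> List[Optional[List[float]]]:
        # Missing keys come back as None, which is how cache misses are detected.
        return [self._data.get(key) for key in keys]

    def mset(self, key_value_pairs: Sequence[Tuple[str, List[float]]]) -> None:
        for key, value in key_value_pairs:
            self._data[key] = value

    def mdelete(self, keys: Sequence[str]) -> None:
        for key in keys:
            self._data.pop(key, None)

    def yield_keys(self, *, prefix: Optional[str] = None) -> Iterator[str]:
        for key in self._data:
            if prefix is None or key.startswith(prefix):
                yield key


store = DictStore()
store.mset([("a:1", [1.0]), ("b:1", [2.0])])
print(store.mget(["a:1", "missing"]))
print(list(store.yield_keys(prefix="a")))
```

Swapping the dict for Redis calls or file reads would change the storage medium without changing how the caching wrapper uses the store.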
LangChain has already provided several common storage options for us:

```python
# langchain.storage
__all__ = [
    "EncoderBackedStore",
    "InMemoryStore",
    "InMemoryByteStore",
    "LocalFileStore",
    "RedisStore",
    "create_lc_store",
    "create_kv_docstore",
    "UpstashRedisByteStore",
    "UpstashRedisStore",
]
```

- embed_query does not use the cache: The embed_query method primarily serves to embed query texts. It does not first attempt to fetch from the cache, because query texts tend to be diverse and unpredictable. Since user queries vary widely and the same question can be phrased in many ways, cached query embeddings would rarely be reused, and the extra cache lookup could reduce query efficiency.

Now, let's demonstrate the use of CacheBackedEmbeddings and observe its effects:

```python
import time

from langchain_openai import OpenAIEmbeddings
from langchain.embeddings import CacheBackedEmbeddings
from langchain.storage import LocalFileStore

embeddings_model = OpenAIEmbeddings()
store = LocalFileStore("./cache/")
cached_embedder = CacheBackedEmbeddings.from_bytes_store(embeddings_model, store)

text = "What's your name?"

start_time = time.time()
embedded1 = cached_embedder.embed_documents([text])
embedded1_end_time = time.time()
embedded1_cost_time = embedded1_end_time - start_time
print(f"Time taken for first embedding: {embedded1_cost_time} seconds")

embedded2 = cached_embedder.embed_documents([text])
print(f"Time taken for second embedding: {time.time() - embedded1_end_time} seconds")
```

Output example:

```plaintext
Time taken for first embedding: 0.6359801292419434 seconds
Time taken for second embedding: 0.0006630420684814453 seconds
```

The LocalFileStore is a caching storage class implemented by LangChain based on local files. As demonstrated, using CacheBackedEmbeddings significantly improves the efficiency of the second embedding.

Vector Storage

After embedding the document chunks into vectors, the next step is to store these vectors in a database for future retrieval.

There are many vector databases, such as Chroma, Faiss, and Pinecone. LangChain integrates the common ones and, as with embedding models, defines an abstract class, VectorStore, that provides a unified interface, while each database supplies its own implementation.

Let's introduce some common methods of VectorStore.

```python
class VectorStore(ABC):
    # Append the original text list as vectors to the database
    @abstractmethod
    def add_texts(
        self,
        texts: Iterable[str],
        metadatas: Optional[List[dict]] = None,
        **kwargs: Any,
    ) -> List[str]:
        ...

    # Delete specified texts from the database
    def delete(self, ids: Optional[List[str]] = None, **kwargs: Any) -> Optional[bool]:
        ...

    # Append the list of document objects as vectors to the database
    def add_documents(self, documents: List[Document], **kwargs: Any) -> List[str]:
        texts = [doc.page_content for doc in documents]
        metadatas = [doc.metadata for doc in documents]
        return self.add_texts(texts, metadatas, **kwargs)

    # Query function, supporting similarity search and maximum marginal relevance search
    def search(self, query: str, search_type: str, **kwargs: Any) -> List[Document]:
        """Return docs most similar to query using specified search type."""
        if search_type == "similarity":
            return self.similarity_search(query, **kwargs)
        elif search_type == "mmr":
            return self.max_marginal_relevance_search(query, **kwargs)
        else:
            raise ValueError(
                f"search_type of {search_type} not allowed. Expected "
                "search_type to be 'similarity' or 'mmr'."
            )

    # Store the original text list as vectors in the database
    @classmethod
    @abstractmethod
    def from_texts(
        cls: Type[VST],
        texts: List[str],
        embedding: Embeddings,
        metadatas: Optional[List[dict]] = None,
        **kwargs: Any,
    ) -> VST:
        ...

    # Store the list of document objects as vectors in the database
    @classmethod
    def from_documents(
        cls: Type[VST],
        documents: List[Document],
        embedding: Embeddings,
        **kwargs: Any,
    ) -> VST:
        texts = [d.page_content for d in documents]
        metadatas = [d.metadata for d in documents]
        return cls.from_texts(texts, embedding, metadatas=metadatas, **kwargs)
```

From the methods above, we can see that VectorStore does not directly accept vector values during storage; instead, it receives the original text or Document objects together with an Embeddings instance. Each vector database integration must therefore implement its own similarity_search and max_marginal_relevance_search for query support, delete for deletion support, and add_texts and from_texts for data storage support. The methods add_documents and from_documents exist for easy integration with the document loaders and splitters that generate Document objects, and they ultimately call add_texts and from_texts.
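To make the division of labor concrete, the sketch below shows a toy in-memory store that accepts raw texts, embeds them itself, and answers similarity queries. `TinyVectorStore` and the `letter_counts` embedder are hypothetical, it does not inherit from LangChain's VectorStore, and it returns plain strings rather than Document objects to stay dependency-free.

```python
import math
from typing import Callable, Iterable, List, Optional, Tuple

Vector = List[float]


def letter_counts(text: str) -> Vector:
    """Hypothetical stand-in embedder: one dimension per letter a-z."""
    counts = [0.0] * 26
    for ch in text.lower():
        if "a" <= ch <= "z":
            counts[ord(ch) - ord("a")] += 1.0
    return counts


def cosine(a: Vector, b: Vector) -> float:
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return sum(x * y for x, y in zip(a, b)) / norm if norm else 0.0


class TinyVectorStore:
    """Toy store: callers hand over texts, the store does the embedding."""

    def __init__(self, embed: Callable[[str], Vector]) -> None:
        self._embed = embed
        self._rows: List[Tuple[str, str, dict, Vector]] = []

    def add_texts(self, texts: Iterable[str],
                  metadatas: Optional[List[dict]] = None) -> List[str]:
        texts = list(texts)
        metadatas = metadatas or [{} for _ in texts]
        ids = []
        for text, metadata in zip(texts, metadatas):
            row_id = str(len(self._rows))  # simple sequential ID
            self._rows.append((row_id, text, metadata, self._embed(text)))
            ids.append(row_id)
        return ids

    def similarity_search(self, query: str, k: int = 4) -> List[str]:
        query_vector = self._embed(query)
        ranked = sorted(self._rows,
                        key=lambda row: cosine(row[3], query_vector),
                        reverse=True)
        return [row[1] for row in ranked[:k]]


store = TinyVectorStore(letter_counts)
ids = store.add_texts(["apple banana fruit", "park children playing"])
print(store.similarity_search("children in the park", k=1))
```

A real integration replaces the list scan with the database's own index, but the calling code sees the same two operations.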

We'll detail the differences and use cases between similarity_search and max_marginal_relevance_search in the next section.

Chroma Installation and Usage

Now, let's take Chroma as an example to explore the usage of vector databases in LangChain.

Chroma is an open-source embedded vector database that is simple to use and fast in performance, storing data on the local disk during runtime. Installing Chroma is straightforward; you can install it directly via pip:

```bash
pip install chromadb
```

Chroma provides common CRUD database operation APIs, and LangChain has developed the VectorStore implementation of Chroma based on these interfaces.

```python
import bs4
from langchain.text_splitter import RecursiveCharacterTextSplitter
from langchain_community.document_loaders import WebBaseLoader
from langchain_community.vectorstores import Chroma
from langchain_openai import OpenAIEmbeddings

# Load the page, keeping only the elements with the CSS class "p1"
loader = WebBaseLoader(
    web_path="https://www.gov.cn/jrzg/2013-10/25/content_2515601.htm",
    bs_kwargs=dict(parse_only=bs4.SoupStrainer(class_=("p1"))),
)
docs = loader.load()

# Split the page into overlapping chunks
text_splitter = RecursiveCharacterTextSplitter(chunk_size=500, chunk_overlap=200)
splits = text_splitter.split_documents(docs)

# Embed the chunks and store them in a persistent Chroma database
persist_directory = "db/chroma"
vectorstore = Chroma.from_documents(
    documents=splits,
    embedding=OpenAIEmbeddings(),
    persist_directory=persist_directory,
)
```

In this example, we used content from the "Consumer Rights Protection Law" for demonstration.

- persist_directory: The path where the data is stored. If the specified path does not exist, it is created automatically. By default, it is empty, meaning the data is only kept in memory and not persisted.

After running the above code, you should see a folder named db/chroma, relative to the directory the script was run from, which contains the relevant files for the Chroma database.

Avoiding Duplicate Storage

Consider the following code scenario:

```python
vectorstore = Chroma.from_documents(
    documents=splits,
    embedding=OpenAIEmbeddings(),
    persist_directory=persist_directory,
)

vectorstore = Chroma.from_documents(
    documents=splits,
    embedding=OpenAIEmbeddings(),
    persist_directory=persist_directory,
)
```

Executing the same storage operation twice on the same list of documents, splits, leads to duplicate entries in the database. This not only wastes storage space but can also affect subsequent retrieval, because identical chunks may be returned more than once.

Therefore, avoiding duplicate data entries in a vector database is an important consideration. There are many ways to prevent duplicate storage. For example, we can maintain a hash filter: generate a hash value for each chunk and check whether that hash already exists in the filter before insertion. If it does, the chunk is not inserted again.
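The hash-filter idea can be sketched as follows. This is a minimal illustration under simple assumptions: the filter is an in-memory set, so it would need to be persisted alongside the database in a real application, and the function names are hypothetical.

```python
import hashlib
from typing import List, Set


def content_hash(text: str) -> str:
    """Stable fingerprint of a chunk's text."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def filter_new_chunks(chunks: List[str], seen_hashes: Set[str]) -> List[str]:
    """Return only chunks not seen before, and record their hashes."""
    fresh = []
    for chunk in chunks:
        digest = content_hash(chunk)
        if digest not in seen_hashes:
            seen_hashes.add(digest)
            fresh.append(chunk)
    return fresh


seen: Set[str] = set()
first_batch = filter_new_chunks(["chunk A", "chunk B", "chunk A"], seen)
second_batch = filter_new_chunks(["chunk B", "chunk C"], seen)
print(first_batch, second_batch)
```

Only the chunks returned by `filter_new_chunks` would then be passed on to the vector database.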

Chroma allows adding and updating data with a document ID parameter, so we can generate a unique ID for each chunk beforehand and thus avoid duplicate entries.
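One way to produce such IDs is to derive them deterministically from each chunk's source and text, so the same chunk always maps to the same ID. The sketch below uses `uuid.uuid5` for this; the namespace URL and the `chunk_id` helper are hypothetical, and whether re-adding an existing ID overwrites the entry or raises an error depends on the Chroma and LangChain versions in use, so that behavior should be verified.

```python
import uuid
from typing import List, Tuple

# Hypothetical namespace; any fixed UUID works as long as it never changes.
NAMESPACE = uuid.uuid5(uuid.NAMESPACE_URL, "https://example.com/rag-demo")


def chunk_id(source: str, text: str) -> str:
    """Deterministic ID: the same source and text always give the same UUID."""
    return str(uuid.uuid5(NAMESPACE, f"{source}\n{text}"))


# Stand-ins for (doc.metadata["source"], doc.page_content) pairs.
chunks: List[Tuple[str, str]] = [
    ("law.htm", "Chapter Three: Obligations of Operators"),
    ("law.htm", "Article 16: Operators shall provide goods or services"),
]
ids = [chunk_id(source, text) for source, text in chunks]
print(ids)

# The IDs would then be passed through the `ids` parameter, for example:
# Chroma.from_documents(documents=splits, embedding=OpenAIEmbeddings(),
#                       ids=ids, persist_directory=persist_directory)
```

Because identical chunks produce identical IDs, it is safest to drop duplicates within a single batch before insertion.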

```python
class Chroma(VectorStore):
    @classmethod
    def from_documents(
        ...
        ids: Optional[List[str]] = None,
        ...
    )
```

Similarity Search

After inserting data, we can directly use the VectorStore.similarity_search method to perform a similarity search and find the documents most relevant to the query text.

```python
query = "What obligations do operators have?"
docs_resp = vectorstore.similarity_search(query=query, k=2)

print(len(docs_resp))  # 2
print(docs_resp[0].page_content)
```

```plaintext
"""
Chapter Three: Obligations of Operators

Article 16: Operators shall provide goods or services to consumers in accordance with the provisions of this law and other relevant laws and regulations.

Operators and consumers shall perform their obligations according to agreements, but the agreements shall not violate the provisions of laws and regulations.

Operators shall uphold social morality, conduct honest business, and protect consumers' legitimate rights and interests; they shall not set unfair or unreasonable transaction conditions or compel transactions.

Article 17: Operators shall listen to consumers' opinions on the goods or services they provide and accept consumer supervision.

...
"""
```

As demonstrated, the similarity_search method returned the two chunks most relevant to the query, and the first one covers operator obligations.

Summary

Embedding technology allows for converting text into multi-dimensional vectors, making semantically similar texts closer in vector space. By calculating the distance between vectors, we can assess the relevance between texts.

The latest embedding models and their evaluation metrics can be found on Hugging Face's MTEB leaderboard. You can shortlist models that score well on the metrics relevant to your requirements and then test them on your own datasets to select the best one.

LangChain provides the Embeddings abstract class for integrating various text embedding models. To avoid redundant embedding calculations, the CacheBackedEmbeddings class caches embedding results to improve efficiency. When embedding the same text again, the result can be directly retrieved from the cache without recalculating.

The vectors obtained from embedding document chunks need to be stored in a vector database. LangChain integrates various vector databases and provides a unified management interface through the VectorStore abstract class. When using vector databases, it is essential to avoid duplicate storage, for example by generating a unique document ID for each chunk.