LLM

At the end of 2022, OpenAI launched ChatGPT, igniting a surge of interest in large language models. People were amazed by its ability to understand language and generate content, and industries of every kind began exploring how AI could empower their businesses. Turning AI into real applications has become the "One Piece" that everyone is chasing.

Of course, for the average worker, the strength of AI can also evoke a sense of crisis. Tasks that once took a day can now be completed by AI in a minute, often with better results. Life is already challenging, and now AI is here to take our "jobs"...

For developers, however, this means more opportunities. We can harness large language models to build a variety of previously unimaginable "AI+" applications. So hurry aboard our AI ship: it has already set sail!

This section will cover the development history of large language models, their use cases, and some related foundational knowledge.

History of LLMs

Let’s first revisit the origins and evolution of large language models, examining how each stage has laid the groundwork for more advanced models. The development timeline dates back to the mid-20th century...

Phase 1: HMM

The Hidden Markov Model (HMM) emerged in the mid-20th century and became popular in the 1970s. It models the grammatical structure of sentences as a sequence of hidden states and uses that structure to predict new words. Because of its Markov assumption, an HMM considers only the most recent input when predicting what comes next. For example, given the input "I went to the store and", it must predict the next word while seeing only the last token, "and". With so little information, good predictions are unlikely.

Phase 2: N-gram

In the 1990s, N-gram models gained popularity. Unlike an HMM, an N-gram model predicts the next token from the previous N-1 tokens. In the earlier example, a 4-gram model can predict from "the store and", which gives better results. However, the short, fixed context still limits prediction quality.
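To make the effect of context length concrete, here is a minimal count-based N-gram predictor. The toy corpus and the names `train_ngram` and `predict_next` are illustrative assumptions, not part of any library, and real N-gram models also smooth or back off for unseen contexts, which this sketch omits. With n = 2 (a bigram model) it sees only "and"; with n = 4 it sees "the store and".

```python
from collections import Counter, defaultdict

def train_ngram(tokens, n):
    """Count which token follows each (n-1)-token context in the corpus."""
    table = defaultdict(Counter)
    for i in range(len(tokens) - n + 1):
        context = tuple(tokens[i:i + n - 1])
        table[context][tokens[i + n - 1]] += 1
    return table

def predict_next(table, prompt, n):
    """Return the most frequent continuation of the last n-1 prompt tokens."""
    context = tuple(prompt[len(prompt) - (n - 1):])
    counts = table.get(context)
    if not counts:
        return None  # unseen context; a real model would back off or smooth
    return counts.most_common(1)[0][0]

# Hypothetical toy corpus.
corpus = ("I went to the store and bought milk . "
          "we sat down and talked . they laughed and talked .").split()
prompt = "I went to the store and".split()

bigram = train_ngram(corpus, 2)    # context: "and"
fourgram = train_ngram(corpus, 4)  # context: "the store and"
print(predict_next(bigram, prompt, 2))    # talked
print(predict_next(fourgram, prompt, 4))  # bought
```

The bigram model picks "talked" because that is what most often follows "and" anywhere in the corpus; the longer context lets the 4-gram model recover "bought".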

Phase 3: RNN

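In the 2000s and 2010s, Recurrent Neural Networks (RNNs) became popular for language modeling because they carry a hidden state from one token to the next and can therefore, in principle, accept inputs of any length. Variants such as Long Short-Term Memory (LSTM) and Gated Recurrent Unit (GRU) networks became widely used and produced relatively good results. However, RNNs are unstable to train on long sequences (the vanishing and exploding gradient problems) and struggle to retain information from far back in the text.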

Phase 4: Transformer

In 2017, Google researchers introduced the Transformer architecture in the paper "Attention Is All You Need". Because it processes all input tokens in parallel instead of one step at a time, it can make use of much longer inputs and avoids the training instability of RNNs. Its attention mechanism lets the model learn to assign different weights to different parts of the input, focusing on the information most relevant to the task. For instance, given "I went to the store and", the model might predict "bought", which requires attending to "went" to infer the past tense.
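The core of this mechanism is scaled dot-product attention, softmax(QK^T / sqrt(d)) V. The pure-Python sketch below computes it for a single head on toy vectors. Real Transformers first project every token into query, key, and value vectors with learned weight matrices and run many heads in parallel; this sketch leaves those parts out and uses hand-picked vectors.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Single-head scaled dot-product attention on lists of vectors."""
    d = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Relevance of each key to this query, scaled by sqrt(d).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # Output = attention-weighted average of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values))
               for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Toy example: the query resembles the first key, so it attends mostly to it.
outs, weights = attention(
    queries=[[1.0, 0.0]],
    keys=[[1.0, 0.0], [0.0, 1.0]],
    values=[[10.0, 0.0], [0.0, 10.0]],
)
print(weights[0], outs[0])
```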

Phase 5: GPT

GPT, or Generative Pre-trained Transformer, is a specific model based on the Transformer architecture. It undergoes unsupervised pre-training on a large corpus and is then fine-tuned for specific tasks, making it suitable for various scenarios, particularly in text generation, dialogue systems, and question answering. This GPT is the large language model we will study in this course.

Use Cases for Large Language Models

After extensive pre-training and fine-tuning, GPT can excel across industries. Rather than asking what it can do, it’s better to consider what we want it to do. In this subsection, we’ll outline some typical application scenarios, giving you a glimpse of the potential and allure of large language models.

Intelligent Customer Service

Leveraging the strong semantic understanding of large language models together with retrieval-augmented generation (RAG), we can readily build intelligent customer service systems that answer from a local knowledge base. In the future, the friendly "亲" ("dear") greeting of Taobao's customer service agents may well come from AI.
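The retrieval half of RAG can be sketched in a few lines. To stay self-contained, this version ranks knowledge-base entries by simple word overlap instead of the vector embeddings a production system would typically use; the FAQ entries, function names, and prompt wording are hypothetical. The resulting prompt is what would be sent to the model.

```python
import re

# Hypothetical local knowledge base (e.g. a shop's FAQ).
KNOWLEDGE_BASE = [
    "Orders ship within 48 hours of payment.",
    "Refunds are processed within 7 business days after the item is returned.",
    "Customer service is available from 9:00 to 21:00 every day.",
]

def words(text):
    """Lowercase word set used for crude relevance scoring."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(question, docs, k=1):
    """Return the k entries that share the most words with the question."""
    q = words(question)
    ranked = sorted(docs, key=lambda d: len(q & words(d)), reverse=True)
    return ranked[:k]

def build_rag_prompt(question, docs, k=1):
    """Ground the model's answer in the retrieved entries."""
    context = "\n".join(f"- {d}" for d in retrieve(question, docs, k))
    return (
        "Answer the customer using only the context below. "
        "If the answer is not in the context, say you don't know.\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )

print(build_rag_prompt("How long do refunds take?", KNOWLEDGE_BASE))
```

Because the model is told to answer only from the retrieved context, updating the knowledge base changes the answers without retraining the model.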

Intelligent Education

AI in education is another significant direction for AI applications. Imagine a highly professional and comprehensive tutor that can adjust learning plans in real-time based on a student's performance, providing personalized educational support and helping achieve true educational equity.

Programming Assistance

In the programming realm, efficiency gains are evident. Those who have used ChatGPT know the experience well. Previously, when faced with syntax issues or unclear requirements, we would spend half an hour searching Google for answers among unreliable resources. Now, conversing with ChatGPT makes coding as easy as chatting. Specialized AI coding assistants like GitHub Copilot can analyze code, comments, and context, automatically generating high-quality code.

Text Summarization

GPT is also a powerful tool for text summarization: it can quickly grasp an article's theme and key information and distill them into a concise, accurate summary. Many AI tools, such as ChatPDF and ChatDoc, let users upload files and ask questions about their content, with the AI summarizing and analyzing it.

Data Analysis

With GPT's natural language processing capabilities, we can interpret and understand data more easily, making data analysis more efficient and improving decision-making. For instance, spotting trends and changes in complex charts used to take a long time; GPT can quickly analyze correlations and produce simple, understandable reports that surface useful information.

Moreover, combining GPT with traditional data analysis tools, for example GPT + SQL, means analysts no longer need to write complex, error-prone SQL queries by hand. Instead, they can ask questions in natural language, such as "Which product has seen the fastest sales growth in recent months?" GPT converts the question into an SQL query, the query is run to retrieve the results, and reporting tools can even turn those results into charts.
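The GPT + SQL workflow can be sketched as follows. The model call is replaced by a hypothetical `fake_llm` function that returns a canned query, so the example runs offline against an in-memory SQLite table; in a real system that function would send the prompt to an LLM API. The schema, data, and prompt wording are assumptions for illustration. Checking that the generated SQL is read-only before running it is a sensible precaution, because model output is not guaranteed to be safe.

```python
import sqlite3

SCHEMA = "CREATE TABLE sales (product TEXT, month TEXT, amount REAL)"

def build_sql_prompt(question, schema):
    """Give the model the schema and ask for a single SQLite query."""
    return (
        f"SQLite schema:\n{schema}\n"
        "Write one SQL query that answers the question. Return only SQL.\n"
        f"Question: {question}"
    )

def fake_llm(prompt):
    """Hypothetical stand-in for a real model call; returns a canned query."""
    return (
        "SELECT product, "
        "SUM(CASE WHEN month = '2024-02' THEN amount ELSE 0 END) - "
        "SUM(CASE WHEN month = '2024-01' THEN amount ELSE 0 END) AS growth "
        "FROM sales GROUP BY product ORDER BY growth DESC LIMIT 1"
    )

def answer(question, conn):
    """Natural-language question -> generated SQL -> query results."""
    sql = fake_llm(build_sql_prompt(question, SCHEMA))
    if not sql.lstrip().upper().startswith("SELECT"):
        raise ValueError("Refusing to run a non-SELECT query")
    return conn.execute(sql).fetchall()

conn = sqlite3.connect(":memory:")
conn.execute(SCHEMA)
conn.executemany(
    "INSERT INTO sales VALUES (?, ?, ?)",
    [("A", "2024-01", 100.0), ("A", "2024-02", 150.0),
     ("B", "2024-01", 80.0), ("B", "2024-02", 200.0)],
)
print(answer("Which product has seen the fastest sales growth in recent months?", conn))
# [('B', 120.0)]
```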

Foundational Knowledge of Large Language Models

Having explored the application scenarios of large language models, let’s discuss some foundational knowledge before diving into AI application development.

What Are LLM, GPT, and ChatGPT?

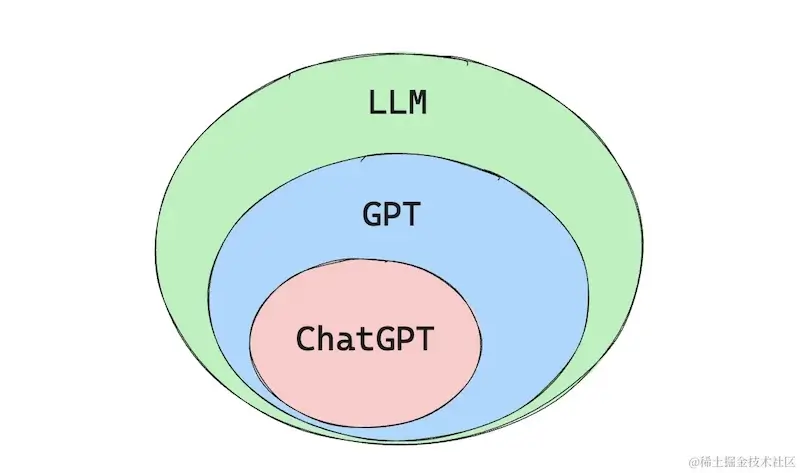

First, we need to clarify the differences and relationships among LLM, GPT, and ChatGPT.

LLM: Large Language Model, the general term for language models with a very large number of parameters trained on massive text corpora. GPT, ChatGPT, and similar models are all LLMs. The AI application development discussed in this course can therefore also be called LLM application development.

GPT: Generative Pre-trained Transformer is a series of models developed by OpenAI, based on the Transformer architecture. It learns from large-scale data through pre-training and is then fine-tuned for specific tasks, making it suitable for various natural language processing tasks.

ChatGPT: A specific application of the GPT series, optimized through fine-tuning to resemble human conversation more closely and engage in more effective dialogues.

The figure at the start of this subsection illustrates how the three relate.

Prompts: The Art of Interacting with Large Language Models

Prompts are the text input given to large language models and serve as commands that drive the models' responses.

Traditional programs accept only input in the format they expect and return an error for anything else. Large language models, by contrast, respond to any prompt much as a person would, so the choice of prompt strongly influences the quality of the response. Consider the following two prompts:

- "How to find a good job?"

- "I am a new graduate student in biotechnology. How should I find a job related to my major? Could you provide some job search websites and tips?"

The first prompt gives the model very little information, so it will produce a lower-quality answer than the second. When writing prompts, we should iterate and refine them, giving specific, clear instructions that guide the model toward the desired result. Later lessons introduce common prompting techniques to help you write high-quality prompts.

In AI application development, prompts are also used to limit and standardize the model's outputs, such as restricting the model to respond only to programming-related questions or ensuring results are returned in markdown format.
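In code, such constraints usually live in a system message sent ahead of the user's prompt. The sketch below builds a request body in the shape used by OpenAI's Chat Completions API (a `model` plus a list of `messages`, each with a `role` and `content`). The system-prompt wording is illustrative, and actually sending the request with an API key is left out so the example runs offline.

```python
import json

SYSTEM_PROMPT = (
    "You are a programming assistant. Only answer questions about "
    "programming; politely decline anything else. "
    "Format every answer in Markdown."
)

def build_chat_request(user_prompt, model="gpt-3.5-turbo"):
    """Return a Chat Completions-style request body as a dict."""
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},  # rules for the model
            {"role": "user", "content": user_prompt},      # the user's question
        ],
    }

body = build_chat_request("How do I reverse a list in Python?")
print(json.dumps(body, indent=2))
```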

Tokens: The Basic Unit of Measurement for Large Language Models

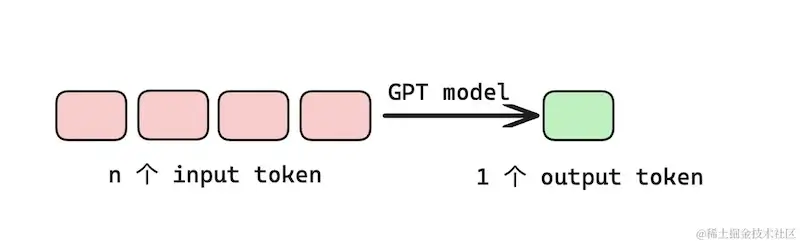

In GPT, the basic unit of measurement is the token. GPT's fundamental operating principle is: given n input tokens, the model outputs 1 token. The figure above illustrates the process.

Tokens are also the billing unit for large language models, and input and output tokens are priced differently. For example, at the time of writing, OpenAI's GPT-3.5 Turbo charged $0.0015 per 1,000 input tokens and $0.002 per 1,000 output tokens, while GPT-4, with its stronger performance, cost roughly 20 to 30 times as much.
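Because billing is per token, estimating the cost of a request is simple arithmetic. The sketch below assumes the GPT-3.5 prices quoted above; current prices differ, so in practice read them from OpenAI's pricing page.

```python
# Assumed USD prices per 1,000 tokens (the GPT-3.5 figures quoted above).
PRICE_PER_1K = {"input": 0.0015, "output": 0.002}

def estimate_cost(input_tokens, output_tokens, prices=PRICE_PER_1K):
    """Cost = input tokens x input price + output tokens x output price."""
    return ((input_tokens / 1000) * prices["input"]
            + (output_tokens / 1000) * prices["output"])

# A 2,000-token prompt with a 500-token answer:
# 2 x $0.0015 + 0.5 x $0.002 = $0.003 + $0.001 = $0.004
print(f"${estimate_cost(2000, 500):.4f}")  # $0.0040
```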

A token does not always correspond to a complete word; it can be a short word or part of a word. GPT splits text into tokens with a subword algorithm (a form of byte-pair encoding, BPE). Common words and short terms are typically a single token each, while rarer or longer words may be split into several tokens.
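BPE starts from single characters (bytes, in GPT's case) and repeatedly merges the most frequent adjacent pair into a new token. The toy character-level sketch below, trained on a hypothetical mini-corpus, is not OpenAI's tokenizer, but it shows why a frequent word ends up as one token while a rarer word is split into several.

```python
from collections import Counter

def merge_pair(symbols, pair):
    """Replace every adjacent occurrence of `pair` with one merged symbol."""
    out, i = [], 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            out.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            out.append(symbols[i])
            i += 1
    return tuple(out)

def learn_bpe(words, num_merges):
    """Learn an ordered list of merge rules from a word list."""
    vocab = Counter(tuple(w) for w in words)  # each word starts as characters
    merges = []
    for _ in range(num_merges):
        pairs = Counter()
        for symbols, freq in vocab.items():
            for a, b in zip(symbols, symbols[1:]):
                pairs[(a, b)] += freq
        if not pairs:
            break
        # Most frequent pair; ties go to the alphabetically smallest pair.
        best = min(pairs, key=lambda p: (-pairs[p], p))
        merges.append(best)
        new_vocab = Counter()
        for symbols, freq in vocab.items():
            new_vocab[merge_pair(symbols, best)] += freq
        vocab = new_vocab
    return merges

def bpe_tokenize(word, merges):
    """Split a word into characters, then apply the merges in learned order."""
    symbols = tuple(word)
    for pair in merges:
        symbols = merge_pair(symbols, pair)
    return list(symbols)

corpus = ["the"] * 10 + ["then"] * 2   # hypothetical: "the" is very common
merges = learn_bpe(corpus, num_merges=2)
print(merges)                          # [('h', 'e'), ('t', 'he')]
print(bpe_tokenize("the", merges))     # ['the']
print(bpe_tokenize("thesis", merges))  # ['the', 's', 'i', 's']
```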

OpenAI provides a Tokenizer page that visualizes how text is tokenized, as shown below:

OpenAI also provides tiktoken, an open-source Python library for tokenization.

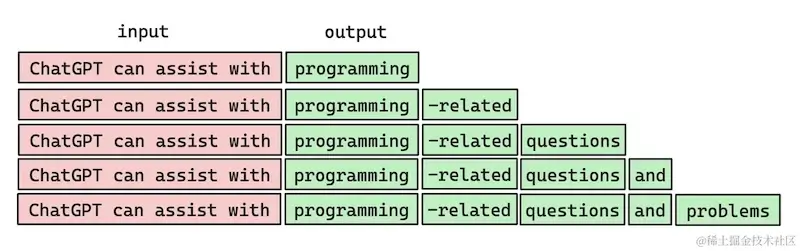

Generating Longer Output

As mentioned earlier, GPT takes n tokens as input and produces 1 token. Yet anyone who has used ChatGPT knows it answers a question with a whole passage of text. It does this by running the model repeatedly: each newly generated token is appended to the input, and the extended sequence is fed back in to produce the next token, until the full response has been generated.

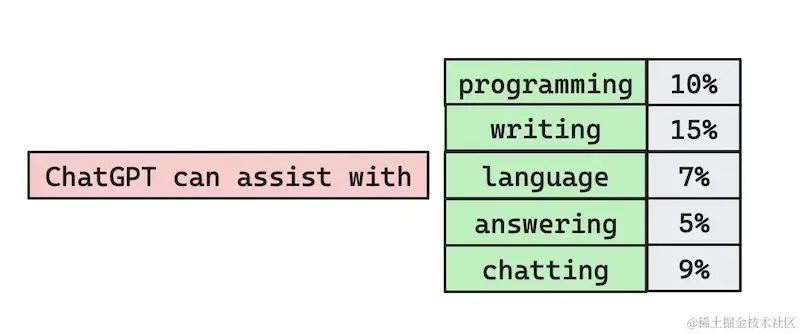
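This generation loop can be sketched in Python. The `toy_model` below is a hypothetical stand-in that looks only at the last token; a real GPT reads all n tokens and returns a probability distribution over its vocabulary. The loop around it (predict one token, append it, feed everything back in, stop at an end token or a length limit) has the same shape.

```python
# Hypothetical stand-in for GPT: a lookup table keyed on the last token.
TOY_NEXT = {"I": "went", "went": "to", "to": "the", "the": "store", "store": "<eos>"}

def toy_model(tokens):
    """Take all n tokens so far and return exactly 1 next token."""
    return TOY_NEXT.get(tokens[-1], "<eos>")

def generate(prompt_tokens, model, max_new_tokens=50, eos="<eos>"):
    """Autoregressive loop: each output token is appended to the input."""
    tokens = list(prompt_tokens)
    for _ in range(max_new_tokens):
        next_token = model(tokens)   # n tokens in, 1 token out
        if next_token == eos:        # the model signals the text is complete
            break
        tokens.append(next_token)    # the output becomes part of the next input
    return tokens

print(" ".join(generate(["I"], toy_model)))  # I went to the store
```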

Output Randomness

When using ChatGPT, you may notice that its responses vary: asking the same question twice can yield different answers. This is because at each step GPT produces a probability distribution over candidate next tokens rather than a single fixed answer. Always choosing the most probable token might seem natural, but doing so consistently tends to produce bland, repetitive text. A temperature parameter controls how often lower-ranked tokens are chosen: values near 0 make the output nearly deterministic, while higher values add randomness and creativity (OpenAI's API accepts values from 0 to 2). A temperature of around 0.8 often works well for creative text.
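Temperature works by dividing the model's raw scores (logits) by T before applying softmax: lowering T sharpens the distribution toward the top token, raising T flattens it, and T = 0 is conventionally treated as always picking the top token. The sketch below uses made-up logits for three hypothetical candidate tokens; the function names are illustrative.

```python
import math
import random

def softmax_with_temperature(logits, temperature):
    """Convert logits to probabilities, scaled by temperature (> 0)."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_next(tokens, logits, temperature, rng=random):
    """Pick the next token; temperature 0 means greedy (always the top token)."""
    if temperature == 0:
        return tokens[logits.index(max(logits))]
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(tokens, weights=probs, k=1)[0]

tokens = ["bought", "saw", "left"]  # hypothetical candidates after "...store and"
logits = [2.0, 1.0, 0.5]            # made-up model scores

for t in (0.2, 0.5, 1.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])

rng = random.Random(0)  # seeded so the samples are reproducible
print([sample_next(tokens, logits, 0.8, rng) for _ in range(5)])
```

Printing the probabilities at several temperatures shows the top token's share shrinking as T grows, which is exactly the extra randomness described above.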

Token Count Limitations

Large language models limit the number of tokens they can process for several reasons: each model is trained with a fixed context window, the computation required by self-attention grows quadratically with sequence length, and longer inputs consume more memory and slow down inference. Limits vary by model: the original GPT-3.5 Turbo allowed 4,096 tokens, while GPT-4 ranges from 8,192 to 128,000 tokens depending on the version. The limit applies to input and output tokens combined, so a long prompt leaves less room for the response.
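Because the limit covers input and output together, the room left for the reply is the context window minus the prompt's token count. A minimal sketch with a hypothetical helper name; in practice the prompt's token count would come from a tokenizer such as tiktoken.

```python
def max_output_tokens(context_window, prompt_tokens, safety_margin=0):
    """Tokens left for the model's reply after the prompt is counted."""
    remaining = context_window - prompt_tokens - safety_margin
    if remaining <= 0:
        raise ValueError("The prompt already fills the context window; shorten it.")
    return remaining

# A 3,000-token prompt sent to a model with a 4,096-token window:
print(max_output_tokens(4096, 3000))  # 1096
```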

Fine-tuning: Training Your Own Exclusive Large Language Model

Fine-tuning lets users tailor a large language model to a specific context, much like onboarding a new employee who learns the company's own processes. OpenAI offers fine-tuning of GPT models through its API; all you need to supply is suitable training data. ChatGPT itself was refined with a related technique, Reinforcement Learning from Human Feedback (RLHF): human reviewers rank the model's responses, a reward model is trained on those rankings, and the language model is then optimized to produce responses that the reward model scores highly.
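Training data for OpenAI's chat-model fine-tuning is a JSONL file: one JSON object per line, each holding a `messages` list of system, user, and assistant turns that demonstrates the desired behavior. The sketch below converts question-answer pairs into that shape. The shop, questions, and answers are hypothetical, and exact requirements (minimum number of examples, supported models) should be checked against OpenAI's current fine-tuning guide.

```python
import json

SYSTEM_PROMPT = "You are the customer service assistant for Acme Store (a hypothetical shop)."

qa_pairs = [
    ("How long does shipping take?", "Orders ship within 48 hours of payment."),
    ("Can I return an item?", "Yes, within 7 days of delivery, as long as it is unused."),
]

def to_jsonl(pairs, system_prompt):
    """One training example per line: system + user + ideal assistant reply."""
    lines = []
    for question, answer in pairs:
        record = {"messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": question},
            {"role": "assistant", "content": answer},
        ]}
        lines.append(json.dumps(record, ensure_ascii=False))
    return "\n".join(lines)

training_data = to_jsonl(qa_pairs, SYSTEM_PROMPT)
print(training_data)
# This string would then be saved to a .jsonl file and uploaded for fine-tuning.
```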

Summary

In summary, GPT is a Transformer-based model trained on large corpora and adaptable to many tasks. Large language models have applications across diverse fields, and the quality of the prompt directly affects the quality of the response. Tokens are GPT's unit of measurement and billing, and every model limits how many tokens it can handle. Fine-tuning makes it possible to obtain a customized language model at relatively low cost.