
Quick Start

LangChain is currently the most active open-source framework for developing LLM applications, with a vibrant community and rapid iteration. At the time of writing, it has over 75k GitHub stars, more than 2,000 contributors, and over 50k projects that depend on it, which shows how widely its potential is recognized.

In this lesson, we will look at why the LangChain framework is worth using, briefly explore its six modules, walk through installing and using LangChain, and finally show how to use LangServe to quickly deploy and publish LLM applications. The mind map for this lesson is as follows:

Why LangChain is Needed

Let's start with a familiar backend development scenario: maintaining the payment module of an e-commerce backend. Each time a new payment channel is integrated, we must interface with that channel's API and also agree on a new protocol with the frontend, as illustrated below:

This architecture is cumbersome, because from the frontend's point of view the flow is always the same: the frontend calls the order API to create an order → the user pays → the backend retrieves the order status. There is no need to design a new protocol for each payment channel. Instead, we can abstract a universal interface layer between the backend and the frontend, which gives the following optimized architecture:

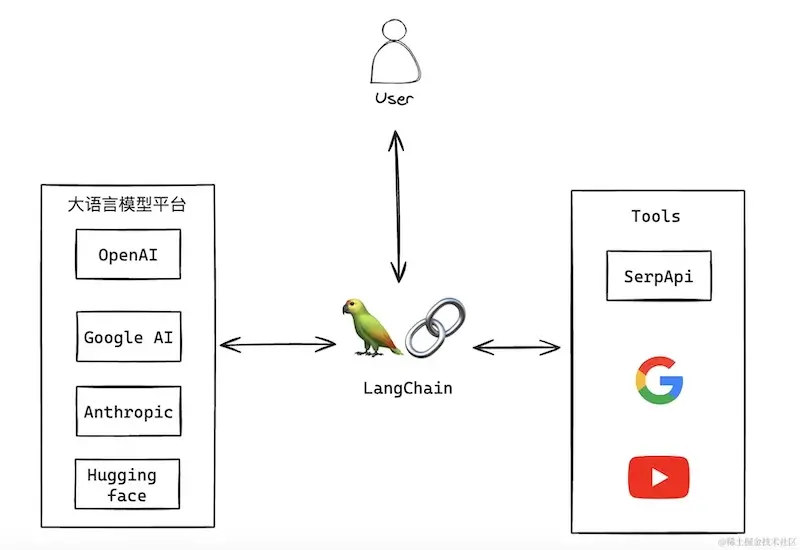
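To make the idea of an interface layer concrete, here is a minimal plain-Python sketch. The class and method names (PaymentChannel, CardChannel, WalletChannel, pay, status, checkout) are hypothetical, and the channels are stubs; a real integration would call each provider's API inside these methods.

```python
from abc import ABC, abstractmethod


class PaymentChannel(ABC):
    """Hypothetical unified interface that every payment channel implements."""

    @abstractmethod
    def pay(self, order_id: str, amount: int) -> str:
        """Start a payment (amount in the smallest currency unit); return a transaction ID."""

    @abstractmethod
    def status(self, transaction_id: str) -> str:
        """Return the payment status for a transaction."""


class CardChannel(PaymentChannel):
    # Stub: a real channel would call the card provider's API here
    def pay(self, order_id: str, amount: int) -> str:
        return f"card-{order_id}"

    def status(self, transaction_id: str) -> str:
        return "PAID"


class WalletChannel(PaymentChannel):
    # Stub: a real channel would call the wallet provider's API here
    def pay(self, order_id: str, amount: int) -> str:
        return f"wallet-{order_id}"

    def status(self, transaction_id: str) -> str:
        return "PAID"


def checkout(channel: PaymentChannel, order_id: str, amount: int) -> str:
    """Frontend-facing flow: identical no matter which channel is plugged in."""
    transaction_id = channel.pay(order_id, amount)
    return channel.status(transaction_id)


# Switching channels changes only the object passed in, not the protocol
print(checkout(CardChannel(), "order-1", 100))    # PAID
print(checkout(WalletChannel(), "order-2", 250))  # PAID
```

Adding a channel means writing one more subclass; checkout and the frontend protocol stay untouched.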

In the context of LLM application development, payment channels are analogous to various pre-trained large language models, while the payment middleware that integrates multiple channels and provides an abstract interface layer is LangChain.

LangChain integrates large language models from many platforms, such as OpenAI, Hugging Face, and Google, and offers a unified way to call them. We no longer need to learn each platform's invocation method, and switching to a new model typically means changing only a line or two of code.

Additionally, LangChain provides a series of components and tools covering data reading, data storage, model interaction, and application publishing. For instance, for data reading, LangChain supports loading various file formats such as CSV, JSON, Markdown, and PDF. If we need to extract content from a URL, LangChain offers loaders for common websites such as Google, YouTube, and even Bilibili. LangChain acts like a "Swiss Army knife," letting developers find a suitable tool for most problems they run into during development.

Furthermore, the concepts of Chains and Agents introduced by LangChain further standardize and simplify the development of complex LLM applications.

Thus, through LangChain, even a developer without expertise in AI can easily combine various large language models with external data to build functional LLM applications.

The Six Modules of LangChain

To cater to various development scenarios, LangChain divides the entire framework into six main modules. Understanding and mastering these modules can facilitate the rapid construction of scalable LLM applications.

Model IO

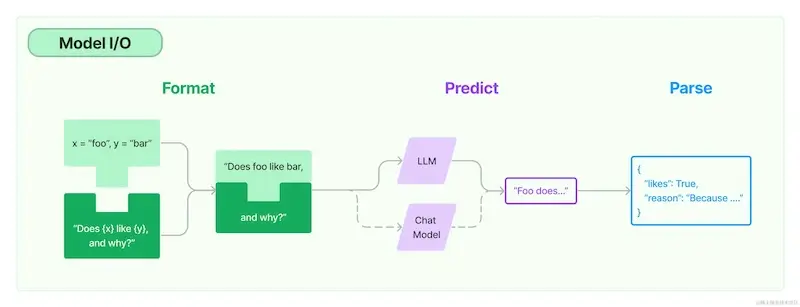

With so many large language models emerging, LangChain classifies them into LLM Models and Chat Models and defines unified input and output interfaces for them, which simplifies how we interact with large language models.

LangChain's prompt templates help manage and control model inputs. LangChain ships with many ready-made prompt templates, and a community hub (LangChain Hub, hosted on LangSmith) offers more templates for different input needs.

LangChain also provides output parsers to extract and format the required information from the model’s outputs.
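Both ideas can be illustrated without LangChain. The sketch below uses only the Python standard library: a template with a named placeholder produces the model input, and a parser turns a comma-separated reply into a Python list. The function names are hypothetical; in LangChain these roles are played by classes such as PromptTemplate and CommaSeparatedListOutputParser, whose exact behavior may differ.

```python
from string import Template

# Hypothetical prompt template with one named placeholder ($topic)
PROMPT = Template("List three popular $topic, separated by commas.")


def build_prompt(topic: str) -> str:
    """Fill the template; substitute raises KeyError if a variable is missing."""
    return PROMPT.substitute(topic=topic)


def parse_comma_list(model_output: str) -> list[str]:
    """Turn a reply like 'a, b, c' into ['a', 'b', 'c'], dropping empty items."""
    return [item.strip() for item in model_output.split(",") if item.strip()]


prompt = build_prompt("programming languages")
print(prompt)  # List three popular programming languages, separated by commas.

# Pretend this string came back from the model
reply = "Python, JavaScript, Go"
print(parse_comma_list(reply))  # ['Python', 'JavaScript', 'Go']
```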

Retrieval

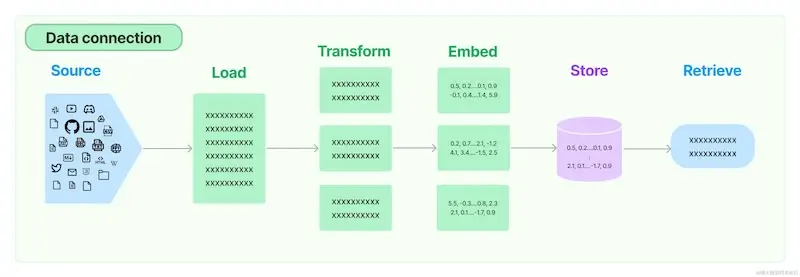

LLM applications often require access to user-specific data that is not included in the model’s original training set. To ensure accurate answers, specific data must be retrieved and passed to the LLM along with the query, a method known as Retrieval-Augmented Generation (RAG).

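To implement RAG, external data must be converted into vectors (embeddings) and stored so that relevant pieces can be retrieved for each query. LangChain integrates many document loaders, text splitters, embedding models, and vector stores, and offers a unified interface for loading, transforming, storing, and querying data.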
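The retrieve-then-generate flow can be sketched in plain Python. To stay self-contained, this toy version replaces a real embedding model with word-count vectors and a vector store with a list; both are simplifying assumptions, not how LangChain or a production RAG system computes embeddings.

```python
import math
import re
from collections import Counter

# Toy knowledge base standing in for user-specific documents
DOCS = [
    "Our store ships orders within 3 business days.",
    "Refunds are processed to the original payment method.",
    "The support team is available on weekdays from 9am to 6pm.",
]


def embed(text: str) -> Counter:
    """Toy 'embedding': a bag-of-words count vector over lowercased words."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[word] * b[word] for word in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


# Toy "vector store": each document kept alongside its vector
STORE = [(doc, embed(doc)) for doc in DOCS]


def retrieve(query: str, k: int = 1) -> list[str]:
    """Return the k documents most similar to the query."""
    q = embed(query)
    ranked = sorted(STORE, key=lambda item: cosine(q, item[1]), reverse=True)
    return [doc for doc, _ in ranked[:k]]


def build_rag_prompt(query: str) -> str:
    """Augment the question with retrieved context before sending it to an LLM."""
    context = "\n".join(retrieve(query))
    return f"Answer using this context:\n{context}\n\nQuestion: {query}"


print(build_rag_prompt("How are refunds processed?"))
```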

Chain

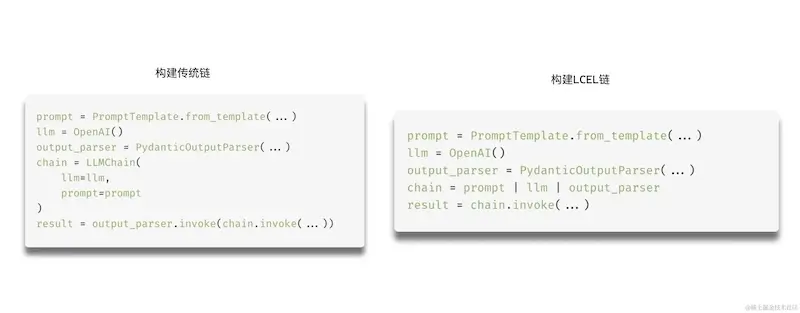

LangChain proposes building LLM applications out of chains. Model calls, tool usage, and data processing each become a link in a chain, which makes LLM applications clear and convenient to build.

The way chains are constructed in LangChain has changed significantly. The new version uses the LangChain Expression Language (LCEL) to create chains, connecting components with the pipe symbol ("|"), which makes building and running a chain more intuitive. LCEL chains also support asynchronous calls and streaming output. The LangChain team is gradually reimplementing each functional chain with LCEL to replace the old chain-building methods.
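The pipe syntax works because every step shares a common interface and Python lets a class overload the | operator. The toy Step class below illustrates only that idea; it is not LangChain's Runnable implementation, which also provides batching, streaming, and async support. In real LCEL code the same shape typically reads prompt | model | parser.

```python
from typing import Any, Callable


class Step:
    """Toy runnable: wraps a function and supports chaining with |."""

    def __init__(self, func: Callable[[Any], Any]):
        self.func = func

    def __or__(self, other: "Step") -> "Step":
        # a | b builds a new step that feeds a's output into b
        return Step(lambda value: other.invoke(self.invoke(value)))

    def invoke(self, value: Any) -> Any:
        return self.func(value)


# Stand-ins for a prompt template, a model call, and an output parser
prompt = Step(lambda topic: f"Tell me a fact about {topic}.")
fake_model = Step(lambda text: f"  MODEL REPLY to: {text}  ")
parser = Step(lambda reply: reply.strip())

chain = prompt | fake_model | parser
print(chain.invoke("cats"))  # MODEL REPLY to: Tell me a fact about cats.
```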

Memory

Models themselves are stateless: they retain no information from past interactions. For instance, if you tell the model your name in one round, it will not know your name in the next round unless that earlier exchange is sent again. In other words, the model has no built-in memory.

For many LLM applications, especially conversational bots, associating historical chat records with responses is a basic feature. LangChain provides various tools to add "memory" to LLM applications. These tools can be used independently or seamlessly integrated into chains.
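Under the hood, adding memory means resending earlier turns with every new request. The sketch below shows that pattern with a hypothetical ChatHistory class and a stub function in place of a real model; the role/content dictionaries mirror the chat message roles described later in this lesson.

```python
class ChatHistory:
    """Hypothetical memory: keeps every message and replays it on each turn."""

    def __init__(self, system_prompt: str):
        self.messages = [{"role": "system", "content": system_prompt}]

    def ask(self, user_text: str, model) -> str:
        self.messages.append({"role": "user", "content": user_text})
        # The model receives the full history, not just the latest question
        reply = model(self.messages)
        self.messages.append({"role": "assistant", "content": reply})
        return reply


def stub_model(messages: list[dict]) -> str:
    """Stand-in for an LLM: answers 'What is my name?' only from the history it receives."""
    question = messages[-1]["content"]
    if question != "What is my name?":
        return "Nice to meet you!"
    for message in messages:
        if message["role"] == "user" and message["content"].startswith("My name is "):
            name = message["content"].removeprefix("My name is ").rstrip(".")
            return f"Your name is {name}."
    return "I don't know your name."


memory = ChatHistory("You're a helpful assistant")
memory.ask("My name is Alice.", stub_model)
print(memory.ask("What is my name?", stub_model))  # Your name is Alice.

# Without the history, the same question arrives with no context
print(stub_model([{"role": "user", "content": "What is my name?"}]))  # I don't know your name.
```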

Agent

In late 2022, Yao et al. introduced the ReAct (Reasoning + Acting) framework, which interleaves reasoning steps with actions such as tool calls to enhance the reasoning and action capabilities of LLMs.

Building on this, LangChain created Agents. In a chain, the execution of specific components or tools is fixed; whereas in Agents, large language models are used for reasoning and decision-making, determining which actions to take and in what order.
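The difference from a fixed chain is easiest to see in a loop where the model's output decides the next step. The sketch below follows the ReAct pattern (reason, pick a tool, observe the result, repeat) but replaces the LLM with a hypothetical scripted_policy function so it runs offline. The tool name, the (action, argument) format, and the city data are made up for illustration and are not LangChain's agent API.

```python
# Made-up data for two fictional cities
POPULATION = {"Alpha": 120_000, "Beta": 340_000}

# Tools the agent may call (hypothetical names)
TOOLS = {"lookup_population": lambda city: POPULATION[city]}


def scripted_policy(question: str, observations: dict) -> tuple[str, str]:
    """Stand-in for the LLM's reasoning step: returns (action, argument)."""
    candidates = [city for city in POPULATION if city in question]
    for city in candidates:
        if city not in observations:
            return "lookup_population", city  # information still missing
    if not observations:
        return "finish", "I don't know."
    winner = max(observations, key=observations.get)
    return "finish", f"{winner} has more people."


def run_agent(question: str, max_steps: int = 5) -> tuple[str, list]:
    """ReAct-style loop: decide, act with a tool, observe, repeat until 'finish'."""
    observations: dict = {}
    trace = []
    for _ in range(max_steps):
        action, arg = scripted_policy(question, observations)
        trace.append((action, arg))
        if action == "finish":
            return arg, trace
        observations[arg] = TOOLS[action](arg)  # observation fed back next turn
    return "Stopped: step limit reached.", trace


answer, trace = run_agent("Which city has more people, Alpha or Beta?")
print(trace)
print(answer)  # Beta has more people.
```

Unlike a chain, the number and order of tool calls here depend on what the policy decides at each step; a real agent gets those decisions from the LLM's output.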

Callback

LangChain provides a callback system that lets you hook into the various stages of a chain. This is useful for logging, monitoring, streaming, and similar tasks. LangChain includes several built-in callback handlers; when running a chain, we pass handler objects to the callbacks parameter to subscribe to events at different stages.
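The mechanism is essentially the observer pattern: the runner calls hook methods on every handler it was given. The sketch below imitates it with a hypothetical run_with_callbacks helper and LoggingHandler class. The hook names on_start and on_end are simplified stand-ins; LangChain's BaseCallbackHandler defines more specific hooks such as on_llm_start and on_chain_end.

```python
import time
from typing import Any, Callable


class LoggingHandler:
    """Hypothetical handler that records events and how long each step took."""

    def __init__(self):
        self.events: list[str] = []
        self._started = 0.0

    def on_start(self, name: str, value: Any) -> None:
        self._started = time.perf_counter()
        self.events.append(f"start {name}: {value!r}")

    def on_end(self, name: str, result: Any) -> None:
        elapsed = time.perf_counter() - self._started
        self.events.append(f"end {name}: {result!r} ({elapsed:.4f}s)")


def run_with_callbacks(name: str, func: Callable[[Any], Any], value: Any, callbacks: list) -> Any:
    """Run one step, notifying every handler before and after it."""
    for handler in callbacks:
        handler.on_start(name, value)
    result = func(value)
    for handler in callbacks:
        handler.on_end(name, result)
    return result


handler = LoggingHandler()
run_with_callbacks("upper", str.upper, "hello", callbacks=[handler])
for event in handler.events:
    print(event)
```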

Using LangChain

LangChain is available in two versions: Python and JavaScript. Because LangChain's Python ecosystem is more mature, and Python's syntax is easy to read for both frontend and backend developers, this guide uses the Python version.

Installing LangChain

Since it was open-sourced, LangChain has iterated rapidly. This reflects the framework's popularity, but it also means frequent updates, some of which broke compatibility. In addition, every earlier release was numbered 0.0.x, so developers hesitated to upgrade and missed out on the features of newer versions.

In January 2024, LangChain finally released version 0.1.0, its first stable version intended for production use. Subsequent releases follow these versioning rules:

- Updates that break the original interface design and cause compatibility issues will increment the minor version number (the second digit of the version number).

- Bug fixes and new features will increment the patch version number (the third digit of the version number).
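Under these rules, whether an upgrade might break existing code can be read directly from the version numbers. The hypothetical may_break helper below encodes that reading: a change in the major or minor number signals possible breaking changes, while a patch-only change should be safe. It is a sketch of the stated policy, not a tool LangChain provides.

```python
def parse_version(version: str) -> tuple[int, int, int]:
    """Split 'major.minor.patch' into integers, e.g. '0.1.13' -> (0, 1, 13)."""
    major, minor, patch = (int(part) for part in version.split("."))
    return major, minor, patch


def may_break(current: str, target: str) -> bool:
    """True if upgrading changes the major or minor number (possible breaking change)."""
    return parse_version(current)[:2] != parse_version(target)[:2]


print(may_break("0.1.13", "0.1.16"))  # False: patch update (bug fixes, new features)
print(may_break("0.1.13", "0.2.0"))   # True: minor update may break existing code
```

Parsing into integers matters: compared as plain strings, "0.1.9" would sort after "0.1.13".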

This course therefore uses LangChain 0.1.x (specifically 0.1.13 at the time of writing), so that the code examples that follow run as shown.

Following LangChain's recommendation, we use Python 3.11; please install Python 3.11 before continuing.

Installing LangChain is quite simple; it can be installed directly via pip.

```bash
pip install langchain==0.1.13
```

Note that although LangChain integrates many language models and related tools, their dependencies are not installed together with LangChain by default, so we need to install the ones we use manually. For instance, since this course mainly uses OpenAI's models for demonstrations, we also need to install langchain-openai:

```bash
pip install langchain-openai
```

Configuring the Development Key

When calling large language models from third-party platforms like OpenAI or Hugging Face via API, a calling key is generally required for billing and authentication. The method of obtaining a key varies by platform; we'll take OpenAI as an example.

Obtaining the Key from Official Channels

- Open the OpenAI API key management page at platform.openai.com/api-keys and follow the prompts to register and log in.

- Click the "Create new secret key" button to generate a new key.

This way, we have successfully created an official OpenAI key.

Obtaining the Key from Domestic Proxies

In some regions, including mainland China, OpenAI's API cannot be accessed directly, and recharging key credits often requires an overseas bank card, which is a barrier for many users. As a result, a number of domestic OpenAI key proxy services have emerged: we can buy key credits from these platforms and use their keys to call the model API. A proxy API usually differs from the official one only in the host; the URL paths and API parameters stay the same as the official API.

You can Google "openai key purchase" to find related proxy services, which are generally not expensive. However, the stability of keys from proxy platforms cannot be guaranteed, and they may go offline unexpectedly. Therefore, it is advisable not to purchase too many credits at once, and to use them primarily for learning and testing; for production, it is better to find a way to purchase through official channels.

Using the Key in LangChain

In the previous step, we obtained a key and the API base URL for calling the model (official or proxy). LangChain supports two ways of configuring them.

Method 1: Directly Passing the Key and API During Model Initialization

```python
from langchain_openai import ChatOpenAI

# Pass the key and the API base URL explicitly (placeholder values shown)
llm = ChatOpenAI(openai_api_key="your_key", openai_api_base="your_api")
```

This is the most straightforward method but also the least secure: if the code is made public, the key is exposed.

Method 2: Using Environment Variables

```bash
export OPENAI_API_KEY="your_key"
export OPENAI_API_BASE="your_api"
```

When initializing the model object, there is no need to specify the key and API base URL; LangChain automatically reads them from these environment variables.

```python
from langchain_openai import ChatOpenAI

# Key and base URL are picked up from OPENAI_API_KEY / OPENAI_API_BASE
llm = ChatOpenAI()
```

This is the recommended approach: the key never appears in the code, so it is not exposed even if the code is made public.
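Because ChatOpenAI() reads these variables implicitly, a quick check before creating the model makes configuration problems easier to spot. The require_env and mask helpers below are a hypothetical, stdlib-only sketch, not part of LangChain; only OPENAI_API_KEY is treated as required, since OPENAI_API_BASE is needed only when using a proxy.

```python
import os


def require_env(*names: str) -> dict[str, str]:
    """Return the requested environment variables, failing fast if any is unset or empty."""
    missing = [name for name in names if not os.environ.get(name)]
    if missing:
        raise RuntimeError(f"Missing environment variables: {', '.join(missing)}")
    return {name: os.environ[name] for name in names}


def mask(secret: str) -> str:
    """Show only the last 4 characters so a full key never ends up in logs."""
    return "*" * max(len(secret) - 4, 0) + secret[-4:]


if __name__ == "__main__":
    try:
        config = require_env("OPENAI_API_KEY")
        print("Using key", mask(config["OPENAI_API_KEY"]))
    except RuntimeError as error:
        print(error)
```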

LLM Model vs Chat Model

When introducing LangChain's six major modules, we mentioned that LangChain categorizes large language models into LLM Model and Chat Model. In this section, we will learn the differences between these two categories and how to use them in LangChain.

Calling LLM Model in LangChain

LLM models are mainly used for text completion. For example, given the input text "Today the weather is really," the model might complete it with "good, with a pleasant temperature and nice sunshine, perfect for going out." These models typically accept a string as input and return a string.

Calling LLM models in LangChain is straightforward; simply specify the model name during initialization and provide a string input when running. For instance, if we want to use OpenAI's gpt-3.5-turbo-instruct model, here’s an example:

```python
from langchain_openai import OpenAI

# Text-completion model: string in, string out
llm = OpenAI(model_name="gpt-3.5-turbo-instruct")

llm.invoke("Today the weather is really")
# Example output (varies between runs):
# > good, with a pleasant temperature and nice sunshine, perfect for going out.
```

It is worth noting that LangChain's OpenAI class uses the gpt-3.5-turbo-instruct model by default.

Calling Chat Model in LangChain

LLM Models are general-purpose models typically used for simple single-turn text interactions, while Chat Models are optimized for dialogue tasks, enabling better multi-turn conversations, such as those with customer service bots or virtual assistants.

The input and output of Chat Models are no longer simple strings; they consist of messages (Message) and introduce the concept of roles, where each message has a corresponding role. Generally, there are three types of roles:

- user: User message. Its content is the user's question. LangChain represents it as HumanMessage.

- assistant: Assistant message. Its content is the model's response. Passing the model's earlier answers back as assistant messages gives the conversation a memory effect. LangChain represents it as AIMessage.

- system: System message. It sets the background or context of the conversation, helping the model understand its role and task and improving the quality of its responses. LangChain represents it as SystemMessage. For example, to have the model act as a senior developer, you can pass SystemMessage(content="You are a professional senior developer").

A Chat Model takes a list of messages as input, which can include one SystemMessage plus multiple HumanMessages and AIMessages. The last message should be a HumanMessage containing the user's question, and the model returns an AIMessage with its answer.
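To see what these role rules mean in practice, here is a plain-Python sketch with a hypothetical Message dataclass (not LangChain's message classes). It checks the constraints just described, at most one system message and a user message last, and converts the list into the role/content dictionaries used by OpenAI-style chat APIs.

```python
from dataclasses import dataclass

ROLES = {"system", "user", "assistant"}


@dataclass
class Message:
    role: str  # "system", "user", or "assistant"
    content: str


def validate(messages: list[Message]) -> None:
    """Known roles only, at most one system message, and a user message last."""
    if any(m.role not in ROLES for m in messages):
        raise ValueError("unknown role")
    if sum(m.role == "system" for m in messages) > 1:
        raise ValueError("more than one system message")
    if not messages or messages[-1].role != "user":
        raise ValueError("the last message must come from the user")


def to_payload(messages: list[Message]) -> list[dict]:
    """Convert to role/content dictionaries for an OpenAI-style chat API."""
    validate(messages)
    return [{"role": m.role, "content": m.content} for m in messages]


history = [
    Message("system", "You're a helpful assistant"),
    Message("user", "1+1=?"),
]
print(to_payload(history))
```

In LangChain, SystemMessage, HumanMessage, and AIMessage correspond to the system, user, and assistant roles, and the ChatOpenAI integration performs a similar conversion before calling the API.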

Here’s an example of calling a Chat Model in LangChain:

```python
from langchain_openai import ChatOpenAI
from langchain_core.messages import HumanMessage, SystemMessage

chat = ChatOpenAI()

input_messages = [
    SystemMessage(content="You're a helpful assistant"),
    HumanMessage(content="1+1=?"),
]

chat.invoke(input_messages)
# Example output:
# > AIMessage(content="1 + 1 equals 2.")
```

Deploy Your LLM Application

After building an application using LangChain, the next step is to deploy it for user access. LangChain provides LangServe, which integrates with Python's FastAPI web framework, making it easy to deploy our AI services.

Building an LLM Application

Install LangServe via pip:

```bash
pip install "langserve[all]"
```

Initialize a FastAPI application:

```python
from fastapi import FastAPI

app = FastAPI(
    title="LangChain Server",
    version="1.0",
    description="A simple API server using LangChain's Runnable interfaces",
)
```

Define the service route:

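```python
from langserve import add_routes

# Expose the llm object (any LangChain Runnable) under the /first_llm path
add_routes(
    app,
    llm,
    path="/first_llm",
)
```

The complete code is as follows: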

```python
from fastapi import FastAPI
from langchain_openai import OpenAI
from langserve import add_routes

# The key and base URL are read from the OPENAI_API_KEY and OPENAI_API_BASE
# environment variables configured earlier, so they are not hardcoded here.
llm = OpenAI()

app = FastAPI(
    title="LangChain Server",
    version="1.0",
    description="A simple API server using LangChain's Runnable interfaces",
)

# Add the LLM route; LangServe serves /first_llm/invoke, /first_llm/playground/, etc.
add_routes(
    app,
    llm,
    path="/first_llm",
)

if __name__ == "__main__":
    import uvicorn

    uvicorn.run(app, host="localhost", port=8000)
```

Running this Python file starts the service, which we can then access at localhost:8000.
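Once the server is running, the route is plain HTTP: add_routes exposes endpoints such as /first_llm/invoke, which accepts a POST with a JSON body of the form {"input": ...} and returns the result in the "output" field of the JSON response. The stdlib sketch below only builds that request (build_invoke_request is a hypothetical helper); pass it to urllib.request.urlopen while the server is up to actually send it.

```python
import json
import urllib.request


def build_invoke_request(base_url: str, value) -> urllib.request.Request:
    """Build a POST to a LangServe route's /invoke endpoint (not sent here)."""
    body = json.dumps({"input": value}).encode("utf-8")
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/invoke",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


request = build_invoke_request("http://localhost:8000/first_llm/", "tell a joke")
print(request.get_method(), request.full_url)  # POST http://localhost:8000/first_llm/invoke

# With the server running, send it and read the model's reply:
# with urllib.request.urlopen(request) as response:
#     print(json.load(response)["output"])
```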

Browser Debugging

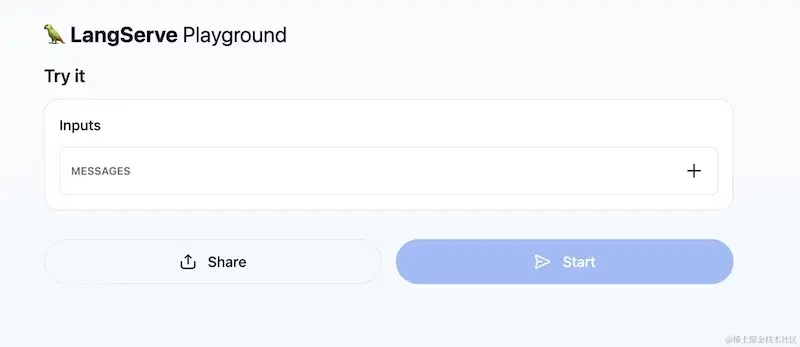

LangServe also includes a built-in UI for debugging input and output. You can access it by visiting http://localhost:8000/first_llm/playground/ in your browser.

Remote Calling of Applications

LangServe provides the RemoteRunnable class to create a client for remote calls to the server.

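```python
from langserve import RemoteRunnable

# Client-side proxy for the /first_llm route; it behaves like a local Runnable
remote_chain = RemoteRunnable("http://localhost:8000/first_llm/")

# The served OpenAI LLM takes a plain string as input
remote_chain.invoke("tell a joke")
```

Summary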

In conclusion, here are the key points from this article:

- LangChain integrates numerous large language models from various platforms and provides a rich set of components and tools. Through modularization and chaining, it offers an easy-to-use LLM application development scaffold for developers.

- LangChain is divided into six major modules: Model IO, Data Retrieval, Chains, Memory, Agents, and Callbacks. These modules cover all aspects of LLM application development.

- In January 2024, LangChain upgraded to version 0.1.x and standardized subsequent version management. It is advisable to use version 0.1.x for production.

- During LLM application development, it is crucial to manage keys properly to avoid leakage, and it is recommended to use environment variables for key configuration.

- LangChain categorizes large language models into LLM Models and Chat Models, each having its own method of invocation in LangChain.

- LangChain's LangServe allows quick deployment of LLM applications, exposing them as API services that can also be invoked remotely.