
Project Background

Many people have bought something online that didn't meet their expectations, only to find that the product page says it doesn't support "7-day no-reason returns." When they contact customer service, the representative may refuse the return, pointing out that the item was clearly marked as non-returnable before purchase. In such cases, customers often feel they are at fault and reluctantly accept the situation.

However, under consumer protection law, most goods can be returned without giving a reason, and only a few categories are exempt. In other words, the platform's "no returns" label is an unfair term. If you file a complaint through the consumer rights hotline (12315), a customer service representative will likely contact you within half a day to process the return.

Given this context, we aim to develop a chatbot that is well-versed in consumer protection laws. If we encounter an unsatisfactory purchase, we can simply ask the bot about the refund process, and it will provide professional "legal assistance," saving us from unnecessary expenses.

The chatbot must meet two key requirements:

- It must accurately answer questions related to consumer rights.

- It should not respond to unrelated questions.

To achieve these requirements, we need to incorporate the relevant content of the "Consumer Rights Protection Law" and restrict the chatbot's responses to consumer rights topics.

Implementation Plan

In simple terms, we store the content of the "Consumer Rights Protection Law" in a vector database. When a question is asked, we first query the vector database for relevant passages. We then send both the question and the retrieved passages to a large language model (LLM) to generate the final answer.

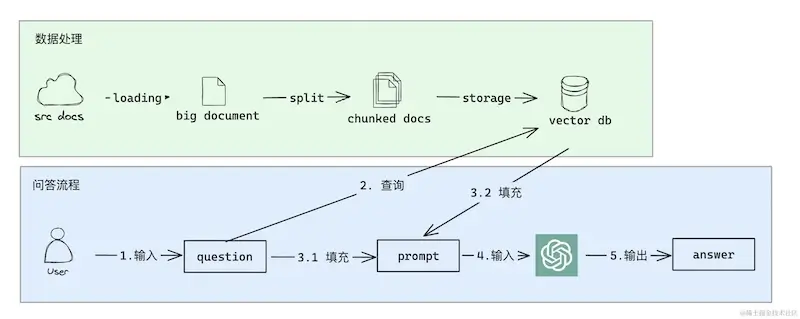

Here’s the flowchart of the implementation process:

- Loading: Load the relevant legal text and convert it into documents that LangChain can work with.

- Split: Split the original large document into smaller chunks that are easier to store and retrieve.

- Storage: After vectorizing the split document chunks, store them in a vector database.

- Retrieval: Search the vector database to find and return the relevant document chunks.

- Input: Combine the user's question with the results from the database and send them to the LLM.

- Output: The LLM generates the answer.

At each step of the process, LangChain provides tools and components to help us accomplish the tasks easily.

Project Setup

Since this is our first hands-on project, we'll walk through setting up the project environment so that you can run the code locally.

First, make sure you have Python 3.11 or higher installed on your local machine. This step is not covered here; you can search online for installation instructions.

Next, we will use pdm as the project's package management tool to manage dependencies and avoid conflicts with other Python projects. Front-end developers can think of it as similar to Node.js's npm, while back-end developers can compare it to Java's Maven or Golang's go mod.

Installing pdm

We'll install pdm with pipx, so install pipx first:

```bash
pip install pipx
pipx install pdm
```

Initializing the Project with pdm

Run pdm init to initialize a project. Note that during initialization, pdm will scan all Python versions installed on your machine, so be sure to select the correct version (≥ 3.11).

```bash
$ pdm init
Creating a pyproject.toml for PDM...
Please enter the Python interpreter to use
0. /opt/homebrew/bin/python3 (3.11)
1. /opt/homebrew/bin/python3.12 (3.12)
2. /usr/bin/python2 (2.7)
Please select (0):
```

When it finishes, pdm generates a pyproject.toml file in the current directory containing the options you selected:

```toml
[project]
name = "consumer_bot"
version = "0.1.0"
description = "Default template for PDM package"
authors = [
    {name = "huiwan_code", email = "xxx@163.com"},
]
dependencies = []
requires-python = "==3.11.*"
readme = "README.md"
license = {text = "MIT"}

[tool.pdm]
package-type = "application"
```

Installing Project Dependencies

Use pdm's add command to install the libraries this project needs. The command below adds all of them at once. You can also add them one at a time, for example pdm add langchain==0.1.13:

```bash
pdm add "langchain==0.1.13" "langchain-openai>=0.0.5" "bs4>=0.0.2" "chromadb>=0.4.22" \
    "langchainhub>=0.1.14" "langserve>=0.0.41" "sse-starlette>=2.0.0"
```

Running the Project

pdm essentially creates a virtual environment in the project directory to isolate dependencies from other projects. Use the run command provided by pdm to execute scripts within this environment. For example, if there is a bot.py script in the project directory, run it with:

```bash
pdm run bot.py
```

Now the environment setup is complete. Next, we'll walk through building the chatbot.

Data Processing

Data Loading

The full text of the "Consumer Rights Protection Law" is available at https://www.gov.cn/jrzg/2013-10/25/content_2515601.htm. LangChain provides document loaders for many document types. We will use WebBaseLoader together with bs4 (BeautifulSoup, an HTML parsing library) to extract the content we need.
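To get a feel for what that filtering does, here is a rough, stdlib-only sketch. It keeps the text of elements carrying a given CSS class and drops everything else on the page, such as navigation. `ClassTextExtractor` and `extract_text` are hypothetical names, not bs4 or LangChain APIs. The sketch assumes reasonably well-formed HTML. The class `p1` in the sample matches the one identified when inspecting the page.

```python
from html.parser import HTMLParser

# Void elements never get a closing tag, so they must not change the nesting depth.
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img", "input", "link", "meta", "source", "wbr"}


class ClassTextExtractor(HTMLParser):
    """Collect the text inside elements whose class list contains target_class."""

    def __init__(self, target_class):
        super().__init__()
        self.target_class = target_class
        self.depth = 0    # > 0 while we are inside a matching element
        self.chunks = []  # text pieces collected so far

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:
            return
        if self.depth:
            self.depth += 1  # a child element of a matching element
        elif self.target_class in (dict(attrs).get("class") or "").split():
            self.depth = 1   # entering a matching element

    def handle_endtag(self, tag):
        if tag in VOID_TAGS or not self.depth:
            return
        self.depth -= 1
        if self.depth == 0:
            self.chunks.append("\n")  # separate consecutive matching elements

    def handle_data(self, data):
        if self.depth:
            self.chunks.append(data)


def extract_text(html, target_class):
    parser = ClassTextExtractor(target_class)
    parser.feed(html)
    parser.close()
    return "".join(parser.chunks)


sample = (
    '<div class="nav">Home | News</div>'
    '<p class="p1">Article 1 text.</p>'
    '<p class="p1">Article 2 <b>bold</b> text.</p>'
)
print(extract_text(sample, "p1"))
```

The real loader does the equivalent filtering inside BeautifulSoup, and it handles messy real-world HTML far more robustly than this sketch.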

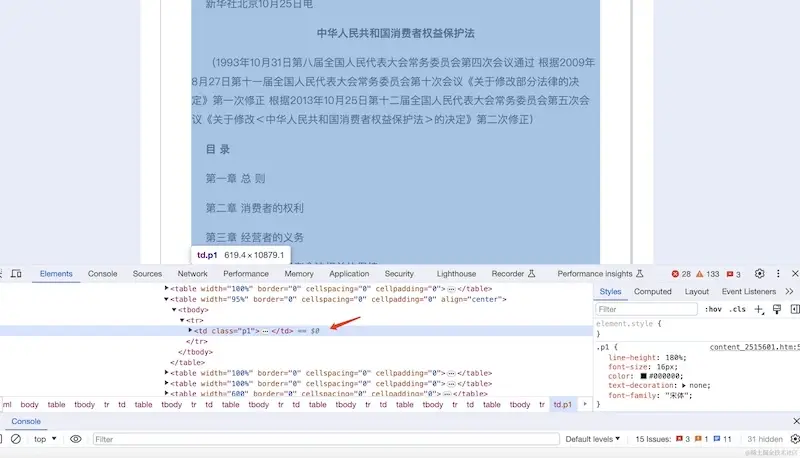

After opening the link, right-click and inspect the elements:

The inspector shows that the law text sits in elements with class="p1". Here's the loading code:

```python
import bs4
from langchain_community.document_loaders import WebBaseLoader

# Fetch the page and parse only the elements with class="p1" (the law text).
loader = WebBaseLoader(
    web_path="https://www.gov.cn/jrzg/2013-10/25/content_2515601.htm",
    bs_kwargs=dict(parse_only=bs4.SoupStrainer(class_="p1")),
)
docs = loader.load()  # a list of LangChain Document objects
```

Text Splitting

To avoid exceeding the model’s token limit and to facilitate information retrieval, we split the original document into smaller chunks using RecursiveCharacterTextSplitter.
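For intuition about chunk_size and chunk_overlap, here is a simplified, hypothetical character-window splitter in plain Python. It is not LangChain's algorithm. RecursiveCharacterTextSplitter also tries to break at natural boundaries (paragraphs, then lines, then words) before falling back to raw characters. The size and overlap parameters, however, play the same roles here. Because of the overlap, text near a boundary appears in both neighbouring chunks, so context is less likely to be lost at a cut.

```python
def split_text(text, chunk_size=1000, chunk_overlap=200):
    """Cut text into windows of at most chunk_size characters, where each
    window repeats the last chunk_overlap characters of the previous one."""
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("need 0 <= chunk_overlap < chunk_size")
    step = chunk_size - chunk_overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this window already reaches the end of the text
    return chunks


print(split_text("abcdefghij", chunk_size=4, chunk_overlap=1))  # ['abcd', 'defg', 'ghij']
```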

```python
from langchain.text_splitter import RecursiveCharacterTextSplitter

# Chunks of up to 1000 characters; neighbouring chunks share 200 characters.
text_splitter = RecursiveCharacterTextSplitter(chunk_size=1000, chunk_overlap=200)
splits = text_splitter.split_documents(docs)
```

Vector Storage

Parsing and splitting the page produced 11 chunks. So that we don't have to download and reprocess the page for every query, we store these chunks in a vector database, which can efficiently handle similarity searches and clustering tasks.
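To make "similarity search" concrete, here is a toy in-memory vector store. It is a hypothetical sketch, not how Chroma works internally. The `embed` function simply counts English words. Real embedding models such as OpenAIEmbeddings return dense float vectors and also handle Chinese text, which this word regex does not. The `search` method ranks the stored chunks by cosine similarity to the query. The two sample chunks are illustrative paraphrases, not quotations from the law.

```python
import math
import re
from collections import Counter


def embed(text):
    # Toy "embedding": counts of lowercase English words.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a, b):
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class ToyVectorStore:
    """Hypothetical store: embed once at insert time, compare at query time."""

    def __init__(self):
        self.rows = []  # list of (vector, text) pairs

    def add_texts(self, texts):
        for text in texts:
            self.rows.append((embed(text), text))

    def search(self, query):
        # Return (score, text) pairs, most similar first.
        q = embed(query)
        scored = [(cosine_similarity(q, vec), text) for vec, text in self.rows]
        return sorted(scored, key=lambda pair: pair[0], reverse=True)


store = ToyVectorStore()
store.add_texts([
    "Consumers may return goods bought online within seven days without giving a reason.",
    "Disputes may be resolved through negotiation, mediation, complaint, arbitration or litigation.",
])
best_score, best_text = store.search("How can I return goods I bought online?")[0]
print(round(best_score, 2), best_text)
```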

Using the Chroma vector store and OpenAIEmbeddings (which reads your API key from the OPENAI_API_KEY environment variable), we store the vectorized data:

```python
from langchain_community.vectorstores import Chroma
from langchain_openai import OpenAIEmbeddings

# Embed each chunk and persist the vectors to ./chroma_db on disk.
db = Chroma.from_documents(
    documents=splits,
    embedding=OpenAIEmbeddings(),
    persist_directory="./chroma_db",
)
```

Here's the complete code for data processing:

```python
import bs4
from langchain.text_splitter import RecursiveCharacterTextSplitter
from langchain_community.document_loaders import WebBaseLoader
from langchain_community.vectorstores import Chroma
from langchain_openai import OpenAIEmbeddings

# 1. Load: keep only the law text (elements with class="p1").
loader = WebBaseLoader(
    web_path="https://www.gov.cn/jrzg/2013-10/25/content_2515601.htm",
    bs_kwargs=dict(parse_only=bs4.SoupStrainer(class_="p1")),
)
docs = loader.load()

# 2. Split: chunks of up to 1000 characters with a 200-character overlap.
text_splitter = RecursiveCharacterTextSplitter(chunk_size=1000, chunk_overlap=200)
splits = text_splitter.split_documents(docs)

# 3. Store: embed the chunks and persist them to ./chroma_db.
db = Chroma.from_documents(documents=splits, embedding=OpenAIEmbeddings(), persist_directory="./chroma_db")
```

Creating a Q&A Bot

Once the data is prepared, we can start building our Q&A bot. The main process involves retrieving relevant document knowledge from the vector database based on the question, using this information as context, and passing it along with the question to the LLM to generate an answer.

Prompt Template

LLMs are driven by prompts, and the quality of the prompt greatly affects the quality of the response. Let’s revisit the requirements for the bot:

- Answer questions based on the content of the Consumer Protection Law.

- Do not answer questions unrelated to the law.

Here is a generic RAG prompt template provided by LangChain:

```text
You are an assistant for question-answering tasks. Use the following pieces of retrieved context to answer the question. If you don't know the answer, just say that you don't know. Use three sentences maximum and keep the answer concise.

Question: {question}

Context: {context}

Answer:
```

The template includes two input variables: question and context. The question is the user's query, and the context is the relevant information retrieved from the vector database.
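Filling those two variables is plain string substitution. PromptTemplate.from_template uses f-string-style placeholders by default, so the sketch below uses Python's built-in str.format as a stand-in. The question and context values are made up for illustration.

```python
TEMPLATE = (
    "You are an assistant for question-answering tasks. Use the following pieces of "
    "retrieved context to answer the question. If you don't know the answer, just say "
    "that you don't know. Use three sentences maximum and keep the answer concise.\n\n"
    "Question: {question}\n\n"
    "Context: {context}\n\n"
    "Answer:"
)

# Both placeholders must be supplied; str.format raises KeyError if one is missing.
filled = TEMPLATE.format(
    question="Can I return a sweater I bought online?",  # the user's query
    context="<text of the chunks returned by the vector database>",  # retrieved text
)
print(filled)
```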

LangChain provides the PromptTemplate class to construct the final prompt, so we need to instantiate a PromptTemplate object using the above template string.

```python
from langchain.prompts.prompt import PromptTemplate

prompt_template_str = """
You are an assistant for question-answering tasks. Use the following pieces of retrieved context to answer the question. If you don't know the answer, just say that you don't know. Use three sentences maximum and keep the answer concise.

Question: {question}

Context: {context}

Answer:
"""

prompt_template = PromptTemplate.from_template(prompt_template_str)
```

Data Retrieval

Next, we need to consider how to populate the context in the prompt template.

In the data processing section, we used OpenAIEmbeddings to vectorize the data and stored it in Chroma. To query it, we instantiate LangChain's Chroma wrapper again, pointing it at the same storage directory and the same embedding model, because queries must be embedded the same way as the stored chunks.

```python
from langchain_community.vectorstores import Chroma
from langchain_openai import OpenAIEmbeddings

# Reopen the store persisted during data processing, with the same embedding model.
vectorstore = Chroma(persist_directory="./chroma_db", embedding_function=OpenAIEmbeddings())
```

LangChain's BaseRetriever class standardizes the retrieval interface. Calling as_retriever on the Chroma object above returns a retriever instance:

```python
retriever = vectorstore.as_retriever(search_type="similarity", search_kwargs={"k": 4})
```

- `search_type`: the type of search; `"similarity"` means a similarity search.
- `search_kwargs`: search options; `k: 4` means the top 4 matching documents are returned for each query.
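The `k` option simply caps how many of the highest-scoring chunks come back. Below is a minimal sketch of that top-k step. It assumes a hypothetical `make_retriever` helper and uses a crude shared-word score in place of vector similarity. Chroma ranks by embedding distance instead, but the meaning of `k` is the same.

```python
import heapq


def make_retriever(chunks, score_fn, k=4):
    """Hypothetical analogue of as_retriever(search_kwargs={"k": k}):
    returns a function mapping a query to at most k best-scoring chunks."""

    def invoke(query):
        return heapq.nlargest(k, chunks, key=lambda chunk: score_fn(query, chunk))

    return invoke


def shared_words(query, chunk):
    # Stand-in similarity: number of distinct words the query and chunk share.
    return len(set(query.lower().split()) & set(chunk.lower().split()))


chunks = [f"chunk {i} about disputes" if i % 2 else f"chunk {i} about refunds" for i in range(10)]
retrieve = make_retriever(chunks, shared_words, k=4)
docs = retrieve("how to resolve disputes")
print(len(docs))  # 4
```

If the store holds fewer than `k` chunks, fewer are returned.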

Querying the retriever returns four documents, as configured:

```python
docs = retriever.invoke("How to resolve disputes?")
len(docs)
#> 4
```

Creating the Q&A Chain

Now that everything is ready, we can link the above components together to build our Q&A chain. The final code for creating the chain is as follows:

python

def format_docs(docs):

return "\n\n".join(doc.page_content for doc in docs)

rag_chain = (

{"context": retriever | format_docs, "question": RunnablePassthrough()}

| prompt_template

| llm

| StrOutputParser()

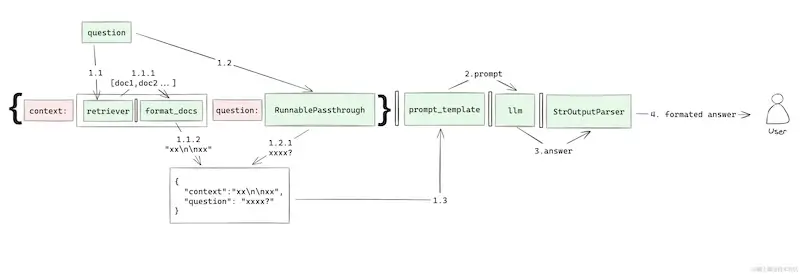

)The pipe-like syntax may seem confusing at first, so let's use the diagram below to explain the execution process of the entire chain:

- The

retrieveris the first step in the chain, so the user's question is passed to the retriever to query the vector database, which returns a list of documents. - The list is then passed to

format_docs, converting it into a string format acceptable by the LLM and assigning it tocontext. RunnablePassthroughrepresents the output of the previous step. Since it is the first step,RunnablePassthrough()will receive the user's question and assign it to thequestionvariable.- Now, we have obtained the two variables required by the prompt template.

We use these two variables and the prompt template to construct the final prompt, pass it to the LLM, and then the LLM generates the answer. The answer is passed to the output parser StrOutputParser, which formats the answer before providing the final result.
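If the `|` operator still feels magical, the toy below re-creates the idea in plain Python. It is a hypothetical sketch, not LangChain's implementation: `Step`, `to_step`, and the fake retriever, prompt, and LLM are stand-ins. `a | b` builds a new step that feeds a's output into b. A dict becomes a step that runs every entry on the same input and collects the results into a dict. LangChain similarly coerces the `{"context": ..., "question": ...}` map into a RunnableParallel. Unlike LangChain, this toy runs the branches one after another rather than concurrently.

```python
class Step:
    """Toy stand-in for a LangChain Runnable: wraps a one-argument function."""

    def __init__(self, fn):
        self.fn = fn

    def invoke(self, value):
        return self.fn(value)

    def __or__(self, other):
        # self | other -> a new Step that runs self, then feeds its output to other.
        nxt = to_step(other)
        return Step(lambda value: nxt.invoke(self.invoke(value)))

    def __ror__(self, other):
        # Called for `dict | step` or `function | step`, where the left side is not a Step.
        return to_step(other) | self


def to_step(obj):
    if isinstance(obj, Step):
        return obj
    if isinstance(obj, dict):
        # Every entry receives the same input; the results are collected into a dict.
        branches = {key: to_step(value) for key, value in obj.items()}
        return Step(lambda value: {key: step.invoke(value) for key, step in branches.items()})
    return Step(obj)  # any other callable


# Hypothetical stand-ins for the real components.
def fake_retriever(question):
    return ["Doc A", "Doc B"]


def format_docs(docs):
    return "\n\n".join(docs)


def fake_prompt(variables):
    return f"Q: {variables['question']}\nC: {variables['context']}"


def fake_llm(prompt):
    return prompt.upper()  # plays the role of llm | StrOutputParser()


passthrough = Step(lambda value: value)  # like RunnablePassthrough()

chain = (
    {"context": Step(fake_retriever) | format_docs, "question": passthrough}
    | Step(fake_prompt)
    | fake_llm
)
print(chain.invoke("Can I return it?"))
```

The question flows into both dict entries. The fake prompt then combines them, and the fake LLM produces the final string, mirroring the order of steps in rag_chain.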

Deploying the LLM Application

Finally, we can use LangServe to deploy and publish the LLM application for users to call.

```python
from fastapi import FastAPI
from langserve import add_routes

app = FastAPI(
    title="Consumer Rights Intelligent Assistant",
    version="1.0",
)

# Expose rag_chain under /consumer_ai (LangServe adds /invoke, /batch, /stream, /playground, etc.).
add_routes(
    app,
    rag_chain,
    path="/consumer_ai",
)

if __name__ == "__main__":
    import uvicorn

    uvicorn.run(app, host="localhost", port=8000)
```

In the code above, the web service listens on localhost, so it is reachable only from the local machine. To make it publicly accessible, change host to the server's public IP (or to "0.0.0.0" to listen on all network interfaces).

Here is the complete code for the Q&A bot bot.py:

```python
from langchain_core.output_parsers import StrOutputParser
from langchain_core.runnables import RunnablePassthrough
from langchain_community.vectorstores import Chroma
from langchain_openai import ChatOpenAI, OpenAIEmbeddings
from langchain.prompts.prompt import PromptTemplate
from fastapi import FastAPI
from langserve import add_routes

# Reopen the vector store built during data processing.
vectorstore = Chroma(persist_directory="./chroma_db", embedding_function=OpenAIEmbeddings())
retriever = vectorstore.as_retriever(search_type="similarity", search_kwargs={"k": 4})

prompt_template_str = """
You are an assistant for question-answering tasks. Use the following pieces of retrieved context to answer the question. If you don't know the answer, just say that you don't know. Use three sentences maximum and keep the answer concise.

Question: {question}

Context: {context}

Answer:
"""
prompt_template = PromptTemplate.from_template(prompt_template_str)

# temperature=0 makes the answers more deterministic.
llm = ChatOpenAI(model_name="gpt-3.5-turbo", temperature=0)


def format_docs(docs):
    # Join the retrieved chunks into one string for the {context} slot.
    return "\n\n".join(doc.page_content for doc in docs)


rag_chain = (
    {"context": retriever | format_docs, "question": RunnablePassthrough()}
    | prompt_template
    | llm
    | StrOutputParser()
)

app = FastAPI(
    title="Consumer Rights Intelligent Assistant",
    version="1.0",
)

# Expose rag_chain under /consumer_ai.
add_routes(
    app,
    rag_chain,
    path="/consumer_ai",
)

if __name__ == "__main__":
    import uvicorn

    uvicorn.run(app, host="localhost", port=8000)
```

Now let's start the service with pdm run bot.py and see how it works:

# pdm run bot.py

LANGSERVE: Playground for chain "/consumer_ai/" is live at:

LANGSERVE: │

LANGSERVE: └──> /consumer_ai/playground/

LANGSERVE:

LANGSERVE: See all available routes at /docs/

INFO: Application startup complete.

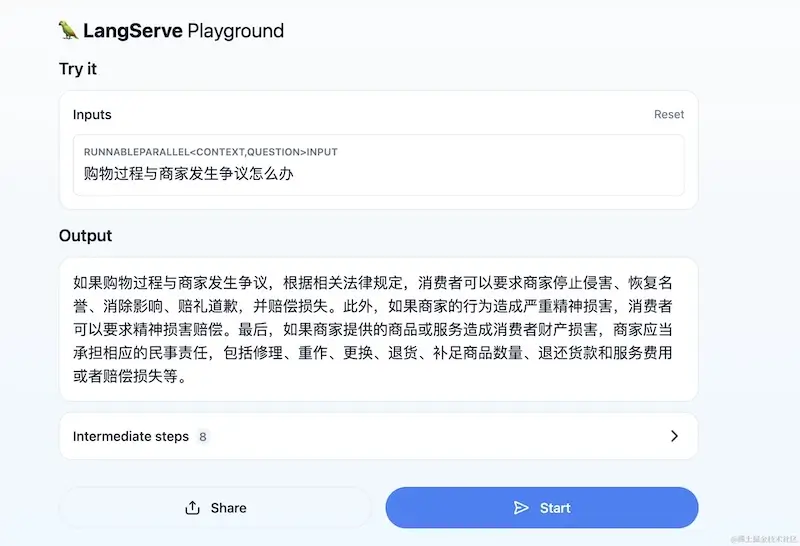

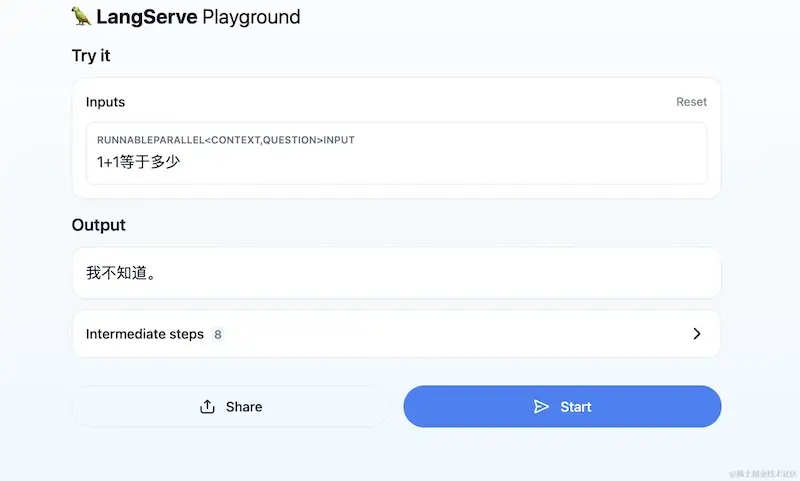

INFO: Uvicorn running on http://localhost:8000 (Press CTRL+C to quit)Visit http://localhost:8000/consumer_ai/playground/ in your browser:

Expanding the intermediate steps, you can see the execution process of the chain.

If you enter a question unrelated to the document, the bot refuses to answer, because the prompt instructs it to say it doesn't know when it can't answer.
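Besides the playground, add_routes also exposes the chain over plain HTTP. Below is a minimal client sketch using only the standard library. It assumes the service from bot.py is running on localhost:8000 and that LangServe's /invoke endpoint accepts a JSON body whose "input" field holds the chain's input (here, the question string) and returns the result under "output". `build_invoke_request` and `ask` are hypothetical helper names. LangServe also ships its own client, RemoteRunnable, if you prefer to stay within LangChain.

```python
import json
import urllib.request


def build_invoke_request(question, base_url="http://localhost:8000/consumer_ai"):
    """Build a POST request for the chain's /invoke endpoint."""
    body = json.dumps({"input": question}).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/invoke",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(question):
    # Requires bot.py to be running; the chain's answer is under the "output" key.
    with urllib.request.urlopen(build_invoke_request(question), timeout=60) as resp:
        return json.loads(resp.read())["output"]
```

With the service running, `ask("Can I return clothes bought online within 7 days?")` should return the bot's answer as a string.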

Summary

Today, we built a real-world Q&A bot from scratch. From data loading and storage to LLM invocation, LangChain provides an all-in-one solution, and the whole project takes fewer than 100 lines of code. This example shows off the power and appeal of LangChain.