
Document Summarization

In this era of information overload, we face an influx of textual materials daily—ranging from complex papers and legal texts to business analysis reports in the workplace. Extracting key information from these dense documents has become an essential skill, often requiring substantial time and effort.

The rise of LLMs has opened new possibilities for automatically summarizing long documents. We can leverage the excellent text comprehension and generation capabilities of LLMs for more efficient document summarization.

Today, we will look at how several LLM summarization techniques work in LangChain and weigh their pros and cons, so that you can make better choices in practical applications.

In this code demonstration, I will use a PDF of Ren Zhengfei's 2018 public speech as the document to be summarized. The PDF has been uploaded to GitHub, where you can download it.

LangChain Document Summarization Chain Code Design

LangChain supports three modes for summarizing documents: Stuff, Refine, and Map-Reduce. We will detail these modes in the following sections, but first, let's understand how LangChain organizes and utilizes its code.

LangChain has developed separate chains for each summarization mode: StuffDocumentsChain, RefineDocumentsChain, and MapReduceDocumentsChain. However, these chains are not typically used directly. Instead, LangChain provides the load_summarize_chain method, allowing users to specify the desired chain type to create the corresponding summarization chain.

```python
def load_summarize_chain(
    llm: BaseLanguageModel,
    chain_type: str = "stuff",
    verbose: Optional[bool] = None,
    **kwargs: Any,
) -> BaseCombineDocumentsChain:
    loader_mapping: Mapping[str, LoadingCallable] = {
        "stuff": _load_stuff_chain,
        "map_reduce": _load_map_reduce_chain,
        "refine": _load_refine_chain,
    }
    ...
    return loader_mapping[chain_type](llm, verbose=verbose, **kwargs)
```

As seen here, the method calls different _load_xxx_chain methods based on the provided chain_type, initializing the corresponding summarization chain.

This design facilitates the specific initialization of each chain. For example, in the _load_stuff_chain method:

```python
def _load_stuff_chain(
    llm: BaseLanguageModel,
    prompt: BasePromptTemplate = stuff_prompt.PROMPT,
    document_variable_name: str = "text",
    verbose: Optional[bool] = None,
    **kwargs: Any,
) -> StuffDocumentsChain:
    llm_chain = LLMChain(llm=llm, prompt=prompt, verbose=verbose)
    return StuffDocumentsChain(
        llm_chain=llm_chain,
        document_variable_name=document_variable_name,
        verbose=verbose,  # type: ignore[arg-type]
        **kwargs,
    )
```

Since StuffDocumentsChain is designed to receive an LLMChain, the _load_stuff_chain method generates an LLMChain using the passed llm and prompt for subsequent initialization.
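To make the loader-mapping idea concrete, here is a minimal plain-Python sketch of the same pattern. It is not LangChain code: the names `load_chain`, `_load_stuff`, `_load_refine`, and `LOADERS` are hypothetical, and the "chains" are just dictionaries. The point it illustrates is that each loader can own the defaults that only make sense for its own chain type, while the public entry point stays a single function.

```python
from typing import Any, Callable, Dict, Mapping


def _load_stuff(llm: str, prompt: str = "Summarize: {text}", **kwargs: Any) -> Dict[str, Any]:
    # Hypothetical loader: only the stuff variant knows about a single `prompt`.
    return {"type": "stuff", "llm": llm, "prompt": prompt, **kwargs}


def _load_refine(
    llm: str,
    question_prompt: str = "Summarize: {text}",
    refine_prompt: str = "Refine {existing_answer} with: {text}",
    **kwargs: Any,
) -> Dict[str, Any]:
    # Hypothetical loader: the refine variant needs two prompts instead of one.
    return {
        "type": "refine",
        "llm": llm,
        "question_prompt": question_prompt,
        "refine_prompt": refine_prompt,
        **kwargs,
    }


# Dispatch table: chain type name -> loader function.
LOADERS: Mapping[str, Callable[..., Dict[str, Any]]] = {
    "stuff": _load_stuff,
    "refine": _load_refine,
}


def load_chain(llm: str, chain_type: str = "stuff", **kwargs: Any) -> Dict[str, Any]:
    """Look up the loader for `chain_type` and forward the remaining kwargs to it."""
    if chain_type not in LOADERS:
        raise ValueError(
            f"Unsupported chain type {chain_type!r}; expected one of {sorted(LOADERS)}"
        )
    return LOADERS[chain_type](llm, **kwargs)
```

Calling `load_chain("my-llm", chain_type="refine", refine_prompt="...")` forwards `refine_prompt` only to the loader that understands it, which is the benefit of keeping initialization details inside each loader.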

Now, let's delve into the various approaches for LLM document summarization.

Stuff Summarization

Stuff is the simplest and most intuitive summarization method, where the entire document is passed to the LLM as context.

In LangChain, StuffDocumentsChain uses the following prompt by default for summarization:

```
Write a concise summary of the following:

"{text}"

CONCISE SUMMARY:
```

This straightforward approach works well for shorter documents, like single web pages or brief papers. Here’s an example of its summarization effectiveness:

```python
from langchain.chains.summarize import load_summarize_chain
from langchain_community.document_loaders import PyPDFLoader
from langchain_core.prompts import PromptTemplate
from langchain_openai import ChatOpenAI  # needed for ChatOpenAI below

# Load document page by page
loader = PyPDFLoader("任正非2018.pdf")
docs = loader.load()

prompt_template = """Write a concise summary of the following:

"{text}"

ANSWER IN THE TEXT ORIGINAL LANGUAGE!

CONCISE SUMMARY:"""
prompt = PromptTemplate(template=prompt_template, input_variables=["text"])

stuff_chain = load_summarize_chain(llm=ChatOpenAI(), chain_type="stuff", prompt=prompt)

# Summarize the first page content
print(stuff_chain.invoke(docs[:1])["output_text"])
```

The output summarizes key points from Ren Zhengfei’s speech, but when attempting to summarize multiple pages at once, a token limit error can occur:

```
openai.BadRequestError: Error code: 400 - {'error': {'message': "This model's maximum context length is 4097 tokens...}}
```

This limitation is a significant drawback of the Stuff summarization method, making it suitable only for shorter documents. Although advancements in context window lengths may extend this "short" limit, there are still challenges. For example, when documents are lengthy, LLMs may lose critical information from the middle, leading to less coherent summaries.
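As a rough illustration of why the limit bites, the sketch below shows what stuffing amounts to (joining every page into one prompt) together with a crude pre-check against a context budget. The helper names are hypothetical, and the 4-characters-per-token ratio is only a rule of thumb for English text; Chinese text such as this speech usually needs more tokens per character, so a real tokenizer would be the safer check.

```python
from typing import List

# The default stuff prompt quoted earlier in this section.
STUFF_TEMPLATE = 'Write a concise summary of the following:\n\n"{text}"\n\nCONCISE SUMMARY:'

# Assumption: roughly 4 characters per token (a rule of thumb for English only).
CHARS_PER_TOKEN = 4


def build_stuff_prompt(pages: List[str]) -> str:
    """Join every page and place the result into the single summarization prompt."""
    return STUFF_TEMPLATE.format(text="\n\n".join(pages))


def estimate_tokens(text: str) -> int:
    """Very rough token estimate based on character count."""
    return len(text) // CHARS_PER_TOKEN + 1


def fits_in_context(
    pages: List[str],
    context_limit: int = 4097,       # the limit reported in the error above
    reserved_for_output: int = 256,  # assumption: room left for the generated summary
) -> bool:
    """Return True if the stuffed prompt plus the output budget fits the window."""
    prompt_tokens = estimate_tokens(build_stuff_prompt(pages))
    return prompt_tokens + reserved_for_output <= context_limit
```

If `fits_in_context(pages)` returns False, that is a hint to switch to one of the chunk-based methods described next.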

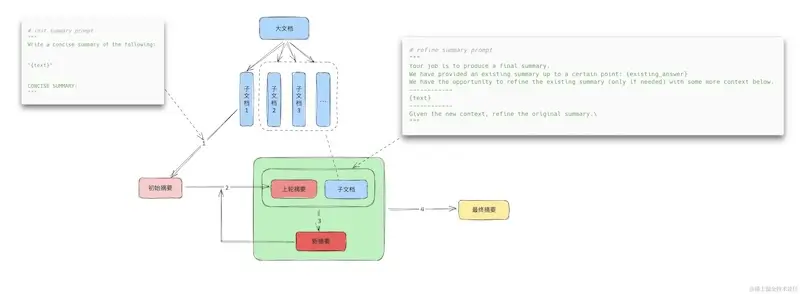

Refine Iterative Summarization

Refine is a more complex summarization technique designed to address the limitations of the Stuff method for large documents. The idea is to split a large document into smaller chunks, summarize the first chunk, and then combine this summary with the next chunk, iterating until all chunks have contributed to the final summary.

Here’s how it works in practice:

```python
from langchain.chains.summarize import load_summarize_chain
from langchain_community.document_loaders import PyPDFLoader
from langchain_openai import ChatOpenAI  # needed for ChatOpenAI below

# Load document page by page
loader = PyPDFLoader("任正非2018.pdf")
docs = loader.load()

refine_chain = load_summarize_chain(
    llm=ChatOpenAI(model_name="gpt-3.5-turbo-1106"),
    input_key="input_documents",
    chain_type="refine",
)

# Summarize the first 10 pages; invoke replaces the deprecated direct call
print(refine_chain.invoke({"input_documents": docs[:10]}, return_only_outputs=True))
```

The output summarizes the first 10 pages of the PDF. While this method avoids the issues of processing long texts at once, the effectiveness of the final summary can depend heavily on the quality of the intermediate summaries. If an intermediate summary is poorly generated, it can negatively impact the overall result.

Moreover, using the default prompts may sometimes lead to repetitive or unhelpful outputs. Therefore, tailoring the refine_prompt to the document type and business context is essential. Additionally, the Refine approach can be slow, because each LLM call depends on the previous summary and the calls must run sequentially.
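The iteration itself is easy to sketch without LangChain. In the snippet below, `llm` is a stand-in for any function that maps a prompt string to a completion, and `refine_summarize` is a hypothetical helper. The placeholder names `{existing_answer}` and `{text}` are modeled on LangChain's refine prompt, but the prompt wording here is purely illustrative and is exactly the part you would adapt to your documents.

```python
from typing import Callable, List

INITIAL_PROMPT = 'Write a concise summary of the following:\n\n"{text}"\n\nCONCISE SUMMARY:'

# Illustrative wording only; adapt it to the document type and business context.
REFINE_PROMPT = (
    "Your job is to produce a final summary.\n"
    "Existing summary so far:\n{existing_answer}\n\n"
    "Refine it (only if needed) with the additional context below.\n"
    "------------\n{text}\n------------\n"
    "If the context adds nothing useful, return the existing summary unchanged."
)


def refine_summarize(
    chunks: List[str],
    llm: Callable[[str], str],
    initial_prompt: str = INITIAL_PROMPT,
    refine_prompt: str = REFINE_PROMPT,
) -> str:
    """Summarize the first chunk, then fold each later chunk into the running summary."""
    if not chunks:
        raise ValueError("need at least one chunk")
    summary = llm(initial_prompt.format(text=chunks[0]))
    for chunk in chunks[1:]:
        # Each call depends on the previous result, so the loop cannot be parallelized.
        summary = llm(refine_prompt.format(existing_answer=summary, text=chunk))
    return summary
```

The loop makes both drawbacks visible: a weak intermediate `summary` is fed straight into every later call, and N chunks always cost N sequential LLM calls.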

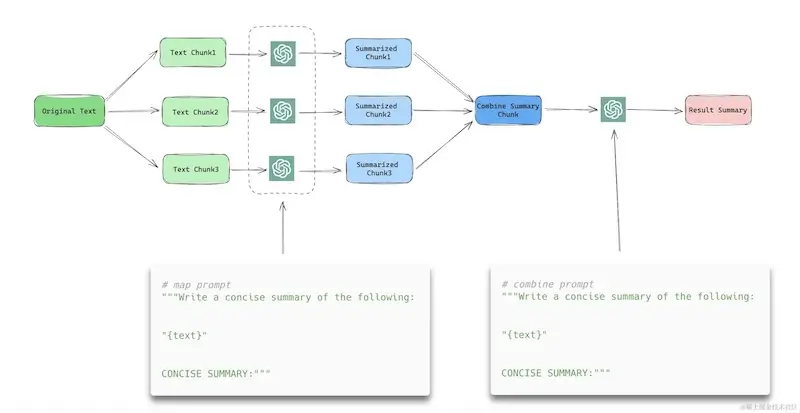

Map-Reduce Summarization

Map-Reduce is a widely used LLM summarization technique. Similar to Refine, it begins by splitting a large document into smaller chunks, then concurrently passes each chunk to the LLM to generate brief summaries. Finally, these summaries are combined and sent back to the LLM to produce a comprehensive document summary.

Workflow Overview

The workflow involves the following steps:

- Split the document into smaller chunks.

- Generate summaries for each chunk in parallel.

- Merge these summaries into a final summary.

By default, LangChain uses the same prompt for both generating chunk summaries and summarizing the final result.

Here’s an example using the Map-Reduce method:

```python
from langchain.chains.summarize import load_summarize_chain
from langchain_community.document_loaders import PyPDFLoader
from langchain_openai import ChatOpenAI  # needed for ChatOpenAI below

# Load document page by page
loader = PyPDFLoader("任正非2018.pdf")
docs = loader.load()

map_reduce_chain = load_summarize_chain(
    llm=ChatOpenAI(model_name="gpt-3.5-turbo-1106"),
    input_key="input_documents",
    chain_type="map_reduce",
)

# Summarize the first 10 pages; invoke replaces the deprecated direct call
print(map_reduce_chain.invoke({"input_documents": docs[:10]}, return_only_outputs=True))
```

The output summarizes the first 10 pages effectively. The Map-Reduce method is generally faster than Refine because the chunk summaries do not depend on one another and can be generated in parallel.
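For comparison with the Refine loop, here is a minimal plain-Python sketch of the map and reduce steps. As before, `llm` is a stand-in for any prompt-to-text function and `map_reduce_summarize` is a hypothetical helper, not LangChain's implementation. The sketch reuses one prompt for both phases and performs a single reduce pass, which assumes the combined partial summaries fit in the model's context window.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List

PROMPT = 'Write a concise summary of the following:\n\n"{text}"\n\nCONCISE SUMMARY:'


def map_reduce_summarize(
    chunks: List[str],
    llm: Callable[[str], str],
    max_workers: int = 4,
) -> str:
    """Summarize every chunk independently, then summarize the joined summaries."""
    if not chunks:
        raise ValueError("need at least one chunk")

    # Map: chunk summaries are independent, so they can run concurrently.
    # pool.map returns results in the same order as the input chunks.
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        partials = list(pool.map(lambda c: llm(PROMPT.format(text=c)), chunks))

    # Reduce: one final call over the concatenated partial summaries.
    return llm(PROMPT.format(text="\n\n".join(partials)))
```

With N chunks this still makes N + 1 LLM calls, but the N map calls overlap in time, which is where the speed advantage over Refine comes from.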

Considerations

The effectiveness of the Map-Reduce method largely depends on how well the document is split. Poorly split chunks can lead to fragmented information, making it difficult to create a coherent summary even in the “reduce” step. Therefore, it’s crucial to ensure that each chunk contains relatively independent and complete information to maintain the quality of the final summary.
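One simple way to keep chunks reasonably self-contained is to split on paragraph boundaries and pack whole paragraphs up to a size limit. The sketch below is plain Python with a hypothetical helper name and a character-based limit chosen for illustration. LangChain ships its own text splitters, which would normally be used instead; this only shows the idea.

```python
from typing import List


def split_on_paragraphs(text: str, max_chars: int = 1000) -> List[str]:
    """Pack whole paragraphs into chunks of at most `max_chars` characters."""
    if max_chars <= 0:
        raise ValueError("max_chars must be positive")

    paragraphs = [p.strip() for p in text.split("\n\n") if p.strip()]
    chunks: List[str] = []
    current = ""
    for para in paragraphs:
        candidate = f"{current}\n\n{para}" if current else para
        if len(candidate) <= max_chars:
            # The paragraph still fits, so keep growing the current chunk.
            current = candidate
            continue
        if current:
            chunks.append(current)
        # Last resort: a single paragraph longer than the limit is cut by length.
        while len(para) > max_chars:
            chunks.append(para[:max_chars])
            para = para[max_chars:]
        current = para
    if current:
        chunks.append(current)
    return chunks
```

Because paragraphs are never cut unless one is oversized on its own, each chunk tends to carry a complete thought into the map step.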

Summary

Today, we covered three methods for document summarization using LLMs: Stuff, Refine, and Map-Reduce.

- Stuff: Simple and convenient, best suited for short documents.

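- Refine: More complex; handles long documents by folding each chunk into a running summary, at the cost of sequential LLM calls.

- Map-Reduce: Efficient for long documents whose chunks are relatively independent, since the chunk summaries can be generated in parallel.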

Each method has its pros and cons, so selecting the appropriate one depends on specific needs.

Additionally, there are many other approaches to document summarization beyond those discussed today. For example, both Refine and Map-Reduce involve frequent LLM calls, which can be time-consuming and costly. A new method discussed on Twitter involves K-Means clustering for document summarization.

K-Means Clustering Approach

This approach works as follows:

- Split documents into smaller chunks and embed them as vectors.

- Use K-Means clustering to group similar vectors into clusters.

- Identify the most representative vector in each cluster and summarize the corresponding chunks to generate the final summary.

By selecting the most representative chunks for summarization, this method can produce comprehensive summaries while minimizing LLM calls.
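The selection step can be sketched in plain Python. This is only an illustration under several assumptions: the embeddings are taken as given (any embedding model could produce them), the K-Means here is a small hand-written version with a deterministic farthest-point initialization rather than a library implementation, and the helper names are hypothetical.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def _dist(a: Vector, b: Vector) -> float:
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def _mean(vectors: List[Vector]) -> List[float]:
    return [sum(col) / len(vectors) for col in zip(*vectors)]


def kmeans(vectors: List[Vector], k: int, iterations: int = 20) -> List[List[float]]:
    """Tiny K-Means: returns k centroids for the given vectors."""
    if not 1 <= k <= len(vectors):
        raise ValueError("k must be between 1 and the number of vectors")
    # Deterministic init: start at the first vector, then repeatedly add the
    # vector farthest from all centroids chosen so far.
    centroids = [list(vectors[0])]
    while len(centroids) < k:
        farthest = max(vectors, key=lambda v: min(_dist(v, c) for c in centroids))
        centroids.append(list(farthest))
    for _ in range(iterations):
        clusters: List[List[Vector]] = [[] for _ in range(k)]
        for v in vectors:
            nearest = min(range(k), key=lambda i: _dist(v, centroids[i]))
            clusters[nearest].append(v)
        # Keep the old centroid if a cluster ends up empty.
        updated = [_mean(c) if c else centroids[i] for i, c in enumerate(clusters)]
        if updated == centroids:
            break
        centroids = updated
    return centroids


def representative_indices(vectors: List[Vector], k: int) -> List[int]:
    """Index of the vector closest to each centroid, sorted in document order."""
    centroids = kmeans(vectors, k)
    picked = {
        min(range(len(vectors)), key=lambda i: _dist(vectors[i], c)) for c in centroids
    }
    return sorted(picked)
```

Given a list `chunks` and matching `embeddings`, the chunks at `representative_indices(embeddings, k)` would then be summarized together (for example with the Stuff method), so the number of LLM calls depends on k rather than on the document length.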