Stream Module

The stream module in Node.js is designed to handle "streams" of data, allowing efficient reading and writing of data in a sequential manner, similar to water flowing through pipes.

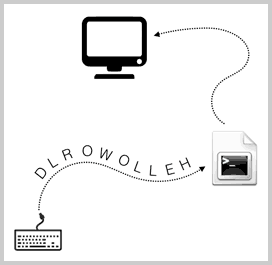

What is a Stream?

A stream is an abstract data structure in which data flows continuously from a source to a destination. For example, keyboard input is a stream of characters (standard input), and output to the display is another stream (standard output). Streams are ordered: you read or write data sequentially, from start to finish, rather than jumping to arbitrary positions.
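The ordering property can be illustrated with a small in-memory stream. This is a minimal sketch, not part of the original text: the helper name collect and the sample strings are made up for illustration, and it assumes the file runs as an ES module so that top-level await is available.

```javascript
import { Readable } from 'node:stream';

// Hypothetical helper: drain a readable stream into an array, chunk by chunk.
// Readable streams are async iterables, so for await...of consumes them in order.
async function collect(stream) {
  const chunks = [];
  for await (const chunk of stream) {
    chunks.push(chunk);
  }
  return chunks;
}

// Readable.from() turns an iterable into a stream; each array item becomes one chunk.
const chunks = await collect(Readable.from(['first', 'second', 'third']));
console.log(chunks); // chunks appear in the same order as in the source
```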

Reading Data with Streams

To read data from a file stream, use the createReadStream method:

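```javascript
import { createReadStream } from 'node:fs';

// Open the file as a readable stream; chunks are decoded as UTF-8 strings.
const rs = createReadStream('sample.txt', 'utf-8');

// 'data' fires once per chunk.
rs.on('data', (chunk) => {
  console.log('---- chunk ----');
  console.log(chunk);
});

// 'end' fires once, after the last chunk has been delivered.
rs.on('end', () => {
  console.log('---- end ----');
});

// 'error' fires if the file cannot be opened or read.
rs.on('error', (err) => {
  console.error(err);
});
```

Here, the data event may fire several times, each time delivering one chunk, until the end event signals that no more data is available.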
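To see the repeated data events in practice, the following sketch counts them. Everything specific here is an assumption for illustration: the helper name countChunks, the temporary file, its 2500-byte size, and the deliberately small highWaterMark of 1024 bytes (which caps how much each read may return). It assumes an ES module with top-level await.

```javascript
import { createReadStream } from 'node:fs';
import { mkdtemp, writeFile, rm } from 'node:fs/promises';
import { tmpdir } from 'node:os';
import { join } from 'node:path';

// Hypothetical helper: count the 'data' events and characters a file stream delivers.
function countChunks(path, highWaterMark) {
  return new Promise((resolve, reject) => {
    let chunks = 0;
    let chars = 0;
    const rs = createReadStream(path, { encoding: 'utf-8', highWaterMark });
    rs.on('data', (chunk) => {
      chunks += 1;
      chars += chunk.length;
    });
    rs.on('end', () => resolve({ chunks, chars }));
    rs.on('error', reject);
  });
}

// Demo: a 2500-byte ASCII file cannot fit in a single 1024-byte read,
// so the 'data' event has to fire more than once.
const dir = await mkdtemp(join(tmpdir(), 'stream-demo-'));
const file = join(dir, 'sample.txt');
await writeFile(file, 'x'.repeat(2500));
const result = await countChunks(file, 1024);
console.log(result);
await rm(dir, { recursive: true, force: true });
```

A larger highWaterMark means fewer, bigger chunks; the total amount of data delivered stays the same.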

Writing Data with Streams

To write data to a file stream, use createWriteStream:

```javascript
import { createWriteStream } from 'node:fs';

const ws = createWriteStream('output.txt', 'utf-8');

// Each write() call appends to the stream in call order.
ws.write('Using Stream to write text data...\n');
ws.write('Continuing to write...\n');
ws.write('DONE.\n');

// end() signals that no more data will be written.
ws.end();
```

For binary data, convert it to a Buffer before writing:

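```javascript
import { createWriteStream } from 'node:fs';

const b64array = [ /* Base64 data */ ];
const ws2 = createWriteStream('output.png');

for (const b64 of b64array) {
  // Decode each Base64 string into raw bytes before writing.
  const buf = Buffer.from(b64, 'base64');
  ws2.write(buf);
}

ws2.end(); // Finish writing
```

Piping Streams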

You can connect a readable stream to a writable stream using the pipe method:

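```javascript
// Send every chunk read from rs to ws; ws is ended automatically when rs ends.
rs.pipe(ws);
```

The pipeline function is a more robust way to connect several streams: it reports an error from any stream in the chain, cleans up the others when one fails, and accepts transform streams between the source and the destination: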

```javascript
import { createReadStream, createWriteStream } from 'node:fs';
import { pipeline } from 'node:stream/promises';

async function copy(src, dest) {
  const rs = createReadStream(src);
  const ws = createWriteStream(dest);
  // Resolves once all data has been written; rejects if any stream fails.
  await pipeline(rs, ws);
}

copy('sample.txt', 'output.txt')
  .then(() => console.log('Copied.'))
  .catch((err) => console.error(err));
```

Using pipeline, you can easily insert transform streams, such as gzip compression, between the input and the output.
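As an illustration of adding a transform stream, the sketch below places a gzip stream from the built-in node:zlib module between the reader and the writer. The function name gzipFile is hypothetical, and the file names in the usage note are placeholders.

```javascript
import { createReadStream, createWriteStream } from 'node:fs';
import { pipeline } from 'node:stream/promises';
import { createGzip } from 'node:zlib';

// Hypothetical helper: compress src into dest.
// Data flows: file reader -> gzip transform -> file writer.
async function gzipFile(src, dest) {
  await pipeline(
    createReadStream(src),
    createGzip(),
    createWriteStream(dest)
  );
}
```

Assuming a file named sample.txt exists, calling gzipFile('sample.txt', 'sample.txt.gz') should produce a compressed copy. The returned promise rejects if reading, compressing, or writing fails.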